Using TALM to Update Clusters

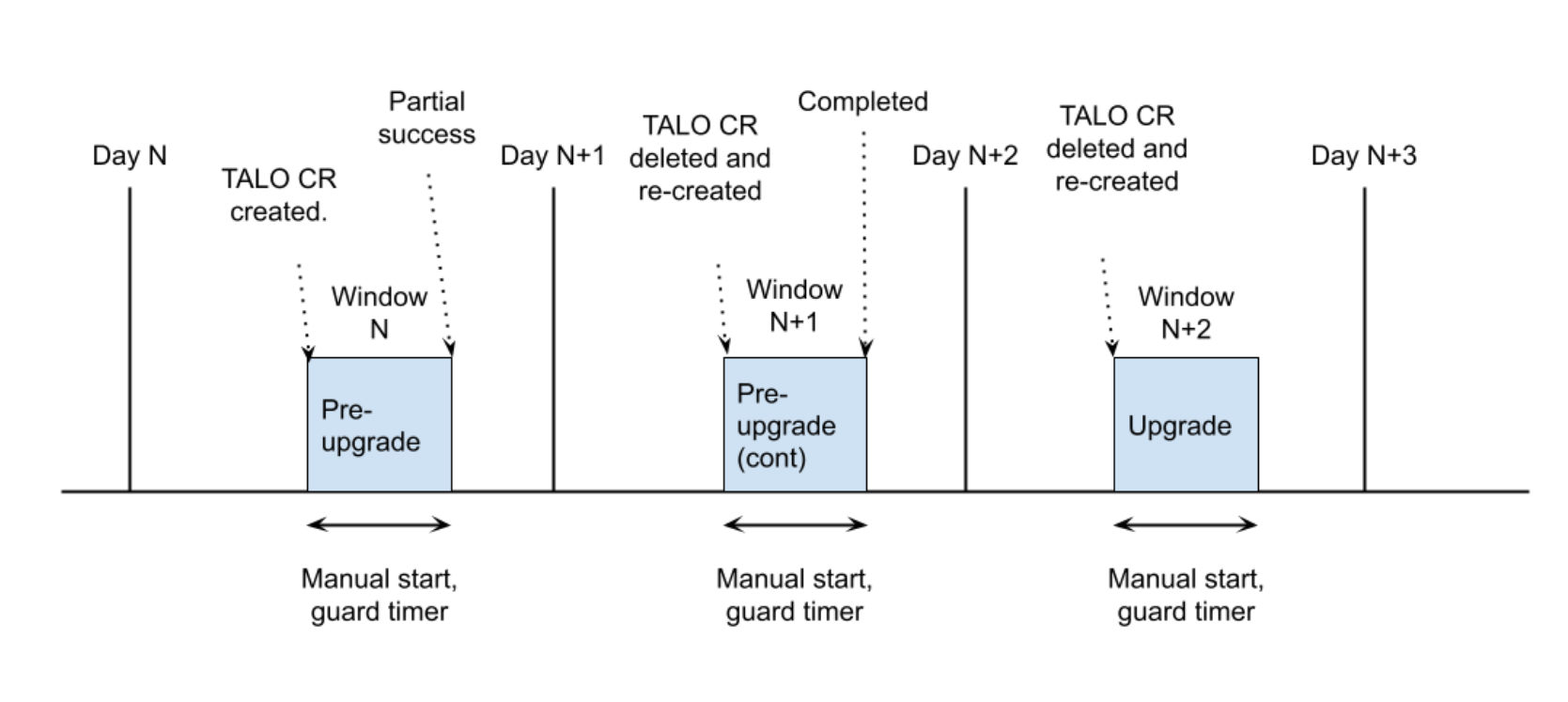

In this section, we will perform a platform upgrade on both managed clusters using the pre-cache and backup features implemented in the Topology Aware Lifecycle Manager (TALM) operator. The pre-cache feature prepares the maintenance operation in the managed clusters by pulling the required artifacts prior to the upgrade. The reasoning behind this feature is that SNO spoke clusters may have limited bandwidth to the container registry, which makes it difficult for the upgrade to complete within the required time. To ensure the upgrade fits within the maintenance window, all the images needed for the platform and operator upgrade are pre-cached on the node, so they are not pulled at upgrade time. The pre-caching itself is done in one or more maintenance windows before the upgrade maintenance window.
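To get a feel for why bandwidth matters, the transfer time can be estimated with simple arithmetic. The sketch below is illustrative only: the payload size and link speed are hypothetical planning numbers, not measurements from this lab, and estimate_pull_minutes is a made-up helper name.

```shell
# Rough estimate of how long pulling an upgrade payload takes on a slow link.
# Both inputs are hypothetical planning numbers, not values from this lab.
#   $1: payload size in megabytes (MB)
#   $2: usable bandwidth in megabits per second (Mbit/s)
# Prints the transfer time in whole minutes (seconds rounded up first).
estimate_pull_minutes() {
  size_mb=$1
  mbps=$2
  seconds=$(( (size_mb * 8 + mbps - 1) / mbps ))   # MB -> Mbit, ceiling division
  echo $(( (seconds + 59) / 60 ))                  # seconds -> minutes, rounded up
}

estimate_pull_minutes 6000 20   # 6 GB over a 20 Mbit/s link, prints 40
```

If the estimate is close to or larger than the upgrade maintenance window, that is a strong hint that the images should be pulled ahead of time.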

The backup feature, on the other hand, implements a procedure for rapid recovery of a SNO in the event of a failed upgrade that is unrecoverable. The SNO needs to be restored to a working state with the previous version of OCP without requiring a re-provision of the application(s).

| The backup feature only allows SNOs to be restored; it is not applicable to any other kind of OpenShift cluster. |

Let’s upgrade both of our clusters. First of all, let’s verify that the TALM operator is running in our hub cluster:

Verifying the TALM state

| The commands below must be executed from the workstation host unless specified otherwise. |

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get operators

NAME AGE

advanced-cluster-management.open-cluster-management 16h

lvms-operator.openshift-storage 16h

multicluster-engine.multicluster-engine 16h

openshift-gitops-operator.openshift-operators 16h

topology-aware-lifecycle-manager.openshift-operators 16h

Next, double-check that there is no problem with the Pod. Notice that the name of the Pod is cluster-group-upgrades-controller-manager, based on the name of the upstream project, Cluster Group Upgrade Operator.

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get pods,sa,deployments -n openshift-operators

NAME READY STATUS RESTARTS AGE

pod/cluster-group-upgrades-controller-manager-75b967b749-qlzn8 2/2 Running 0 3h3m

pod/gitops-operator-controller-manager-7b6b8967b8-4f8rx 1/1 Running 0 3h6m

NAME SECRETS AGE

serviceaccount/builder 1 3h17m

serviceaccount/cluster-group-upgrades-controller-manager 1 3h3m

serviceaccount/cluster-group-upgrades-operator-controller-manager 1 3h3m

serviceaccount/default 1 3h43m

serviceaccount/deployer 1 3h17m

serviceaccount/gitops-operator-controller-manager 1 3h6m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cluster-group-upgrades-controller-manager 1/1 1 1 3h3m

deployment.apps/gitops-operator-controller-manager 1/1 1 1 3h6m

Finally, let’s take a look at the cluster group upgrade (CGU) CRD managed by TALM. If we take a closer look, we will notice that an already completed CGU was applied to SNO2. As we mentioned in the inform policies section, none of the policies are enforced; the user has to create the proper CGU resource to enforce them. However, when using ZTP, we want our cluster to be provisioned and configured automatically. This is where TALM steps through the set of created (inform) policies and enforces them once the cluster has been successfully provisioned. Therefore, the configuration stage starts without any intervention, and we end up with an OpenShift cluster ready to process workloads.

| It’s possible that you get UpgradeNotCompleted. If that’s the case, you need to wait for the remaining policies to be applied. You can check the status of the policies here. |
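When scripting this check, the state can be read from the STATE column of the CGU listing. This is a minimal sketch, assuming the five-column layout (NAMESPACE NAME AGE STATE DETAILS) that this lab's listings show; cgu_state is a hypothetical helper name, not part of oc or TALM.

```shell
# Print the STATE column for one CGU, reading an `oc get cgu -A` style table
# from stdin. Assumes the columns NAMESPACE NAME AGE STATE DETAILS.
#   $1: CGU name to look up
cgu_state() {
  awk -v name="$1" 'NR > 1 && $2 == name { print $4 }'
}

# Usage against the hub (not executed here):
#   oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu -A | cgu_state sno2
```

The helper prints nothing when the CGU is not listed, so an empty result can be treated as "not created yet".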

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu sno2 -n ztp-install

NAMESPACE NAME AGE STATE DETAILS

ztp-install sno2 79m Completed All clusters are compliant with all the managed policies

Getting the SNO clusters kubeconfigs

In the previous sections we have deployed the sno2 cluster and attached the sno1 cluster. Before we continue with TALM, let’s grab the kubeconfigs for both clusters, since we will need them for the next sections.

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig -n sno1 extract secret/sno1-admin-kubeconfig --to=- > ~/5g-deployment-lab/sno1-kubeconfig

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig -n sno2 extract secret/sno2-admin-kubeconfig --to=- > ~/5g-deployment-lab/sno2-kubeconfig

Creating the upgrade PGT

Create an upgrade PGT in inform mode, as usual, that will apply to and upgrade the SNOs located in Europe (binding rule: du-zone: "europe"), that is, the SNO1 and SNO2 clusters. This file needs to be created in the ztp-repository Git repo that we created earlier.

cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/zone-europe-upgrade-414-1.yaml
---
apiVersion: ran.openshift.io/v1
kind: PolicyGenTemplate
metadata:
  name: "europe-snos-upgrade"
  namespace: "ztp-policies"
spec:
  bindingRules:
    du-zone: "europe"
    logicalGroup: "active"
  mcp: "master"
  remediationAction: inform
  sourceFiles:
    - fileName: ClusterVersion.yaml
      policyName: "version-414-1"
      metadata:
        name: version
      spec:
        channel: "stable-4.14"
        desiredUpdate:
          force: false
          version: "4.14.1"
          image: "infra.5g-deployment.lab:8443/openshift/release-images@sha256:05ba8e63f8a76e568afe87f182334504a01d47342b6ad5b4c3ff83a2463018bd"
      status:
        history:
          - version: "4.14.1"
            state: "Completed"
EOF

Modify the kustomization.yaml inside the site-policies folder so that it includes this new PGT and is eventually applied by ArgoCD.

| If you’re using macOS and you’re getting errors while running sed -i commands, make sure you are using gsed. If you do not have it available, install it: brew install gnu-sed. |
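If GNU sed is not an option, the same insertion can be done with awk, which behaves the same way on Linux and macOS. This is only a sketch of an alternative technique, not the method the lab uses; add_kustomize_entry is a hypothetical helper name, and it assumes the list entries in kustomization.yaml are written as "- name.yaml".

```shell
# Add "- <entry>" right after the line containing "- <anchor>" in a
# kustomization file, reusing the anchor line's indentation.
# Does nothing if the entry is already listed, so it is safe to re-run.
#   $1: file to edit, $2: existing entry to insert after, $3: new entry
add_kustomize_entry() {
  file=$1; anchor=$2; entry=$3
  if grep -qF -- "- $entry" "$file"; then
    return 0                          # already present, nothing to do
  fi
  tmp=$(mktemp)
  awk -v anchor="- $anchor" -v entry="$entry" '
    { print }
    index($0, anchor) {
      indent = $0
      sub(/-.*/, "", indent)          # keep only the leading whitespace
      print indent "- " entry
    }' "$file" > "$tmp" && mv "$tmp" "$file"
}

# Usage (not executed here):
#   add_kustomize_entry ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/kustomization.yaml \
#     group-du-sno.yaml zone-europe-upgrade-414-1.yaml
```

Because the helper checks for the entry first, running it twice does not produce a duplicate line.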

sed -i "/- group-du-sno.yaml/a \ \ - zone-europe-upgrade-414-1.yaml" ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/kustomization.yaml

Then commit all the changes:

cd ~/5g-deployment-lab/ztp-repository/

git add site-policies/fleet/active/zone-europe-upgrade-414-1.yaml site-policies/fleet/active/kustomization.yaml

git commit -m "adds upgrade policy"

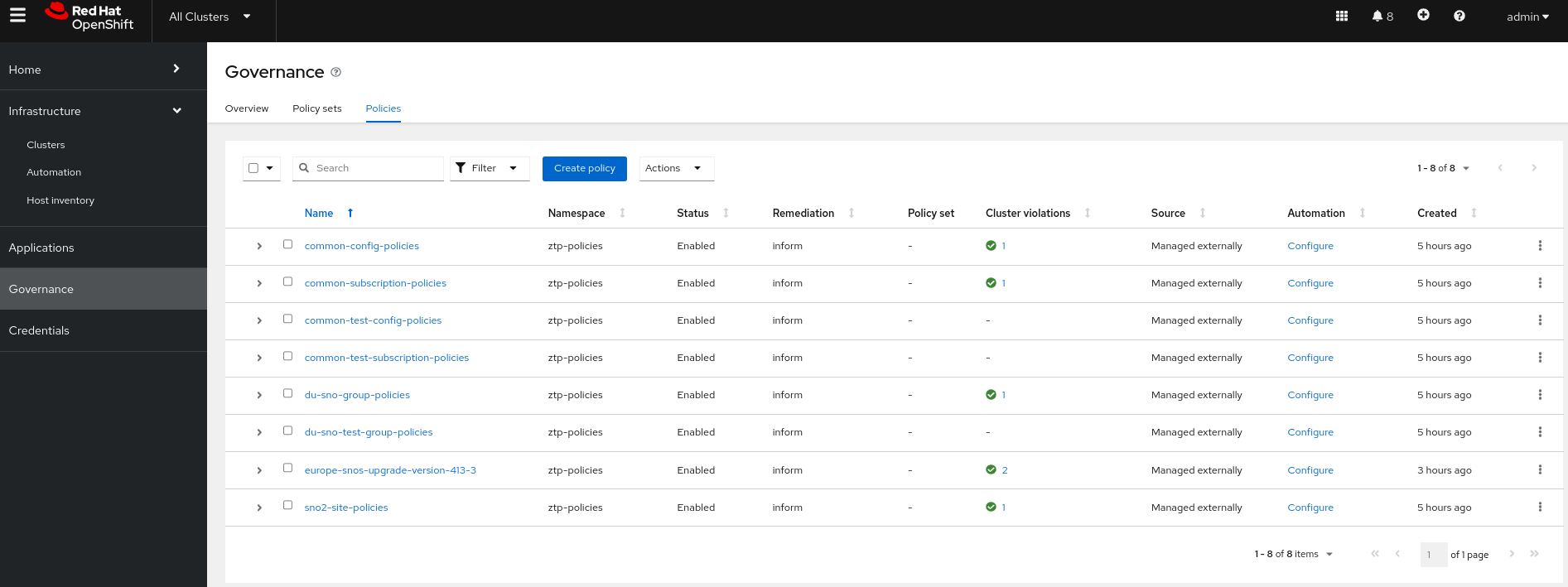

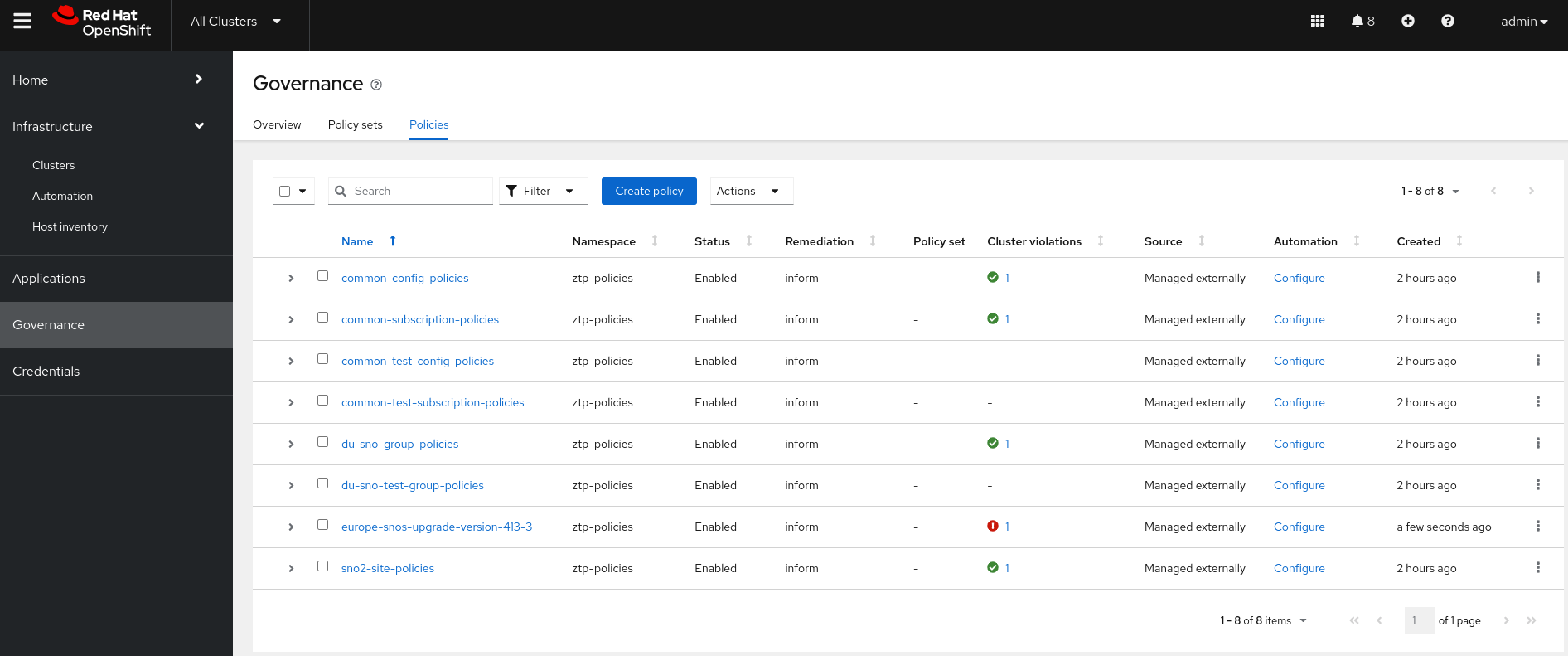

git push origin mainOnce committed, in a couple of minutes we will see a new policy in the multicloud RHACM console. As noticed, the policy named europe-snos-upgrade-version-414-1 has a violation. However, only one cluster is not compliant. If we check the policy information we will see that this policy is only targeting SNO2 cluster. That’s because the SNO1 cluster does not have the labels zone-europe and active logicalGroup that the PGT is targeting in its binding rule.

| Notice in the following picture that there are policies not applying to any cluster. Those are policies targeting the test environment. This is expected since we do not have any clusters in the test environment, e.g, no clusters are labeled with the proper label for testing: logicalGroup=test. |
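To check from the command line which clusters currently carry the labels used in the binding rules, the rules can be turned into a label selector. This is a sketch under the assumption that all binding rules must match at the same time, which is what a comma-separated selector expresses; label_selector and clusters_matching are hypothetical helper names.

```shell
# Join key=value pairs into a comma-separated label selector ("a=1,b=2").
label_selector() {
  sel=""
  for kv in "$@"; do
    sel="${sel:+$sel,}$kv"
  done
  echo "$sel"
}

# List the managed clusters carrying all the given labels.
# Needs access to the hub cluster, so it is only defined here, not called.
clusters_matching() {
  oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get managedcluster \
    -l "$(label_selector "$@")" -o name
}

# Usage (not executed here):
#   clusters_matching du-zone=europe logicalGroup=active
```

A cluster that is missing from the listing is not targeted by the policy until it is labeled accordingly.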

Let’s add the proper labels to the production cluster SNO1:

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig label managedcluster sno1 du-zone=europe logicalGroup=active

managedcluster.cluster.open-cluster-management.io/sno1 labeled

Applying the upgrade

At this point, we need to create a Cluster Group Upgrade (CGU) resource that will start the upgrade process. In our case, the process will be divided into two stages:

- Run a pre-cache of the new OCP release prior to starting the upgrade process.

- Before running the upgrade, a backup will be done.

Backup and pre-cache

Let’s create the CGU. In this case, we will apply the managed policy (europe-snos-upgrade-version-414-1) to both clusters at the same time (maxConcurrency is 2). Notice that the CGU is created disabled (enable: false), which is the suggested approach when running the pre-caching feature. This way, once the pre-caching process is done, we are ready to start the upgrade process by enabling the CGU. This makes it easier to comply with an enterprise maintenance window.
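The effect of maxConcurrency can be illustrated with a little arithmetic. The sketch below assumes that clusters are remediated in batches of at most maxConcurrency clusters each; batch_count is a hypothetical helper, not a TALM command.

```shell
# Number of remediation batches, assuming batches of at most maxConcurrency
# clusters: ceil(clusters / maxConcurrency).
#   $1: number of clusters in the CGU, $2: maxConcurrency
batch_count() {
  echo $(( ($1 + $2 - 1) / $2 ))
}

batch_count 2 2   # this lab: sno1 and sno2 with maxConcurrency 2, prints 1
```

With a larger fleet, for example 5 clusters and maxConcurrency 2, the same formula gives 3 batches, which is worth keeping in mind when sizing the CGU timeout.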

Remember that several gigabytes of artifacts need to be downloaded to the spoke for a full upgrade. SNO spoke clusters may have limited bandwidth to the hub cluster hosting the registry, which makes it difficult for the upgrade to complete within the required time. To ensure the upgrade fits within the maintenance window, the required artifacts need to be present on the spoke cluster prior to the upgrade. Therefore, the process is split into two stages, as mentioned.

In OCP 4.14+, there is a new CRD called PreCachingConfig that allows us to be more precise about the container images that our cluster needs for the upgrade. We must apply the PreCachingConfig CR to our hub cluster before or concurrently with the CGU:

| You can obtain a more detailed list for excludePrecachePatterns for each upgrade by following this KCS. |

cat <<EOF | oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig apply -f -
---
apiVersion: ran.openshift.io/v1alpha1
kind: PreCachingConfig
metadata:
  name: update-europe-snos
  namespace: ztp-policies
spec:
  overrides: {}
  excludePrecachePatterns:
    - agent-installer-
    - alibaba-
    - aws-
    - azure-
    - cloud-
    - gcp-
    - ibmcloud
    - ibm-
    - nutanix-
    - openstack-
    - ovirt-
    - powervs-
    - sdn
    - vsphere-
    - kuryr-
    - csi-
    - hypershift
  additionalImages: []
---
apiVersion: ran.openshift.io/v1alpha1
kind: ClusterGroupUpgrade
metadata:
  name: update-europe-snos
  namespace: ztp-policies
spec:
  preCaching: true
  preCachingConfigRef:
    name: update-europe-snos
    namespace: ztp-policies
  backup: true
  clusters:
    - sno1
    - sno2
  enable: false
  managedPolicies:
    - europe-snos-upgrade-version-414-1
  remediationStrategy:
    maxConcurrency: 2
    timeout: 240
EOF

Once applied, we can see that the status moved to InProgress, with a message detailing that pre-caching is in progress for both SNOs. This means that the first step in our process, the pre-cache, is executing.

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu -A

NAMESPACE NAME AGE STATE DETAILS

ztp-install local-cluster 3h8m Completed All clusters already compliant with the specified managed policies

ztp-install sno1 15m Completed All clusters already compliant with the specified managed policies

ztp-install sno2 86m Completed All clusters are compliant with all the managed policies

ztp-policies update-europe-snos 20s InProgress Precaching in progress for 2 clusters

Connecting to any of our spoke clusters, we can see a new job called pre-cache being created.

| The pre-cache job can take up to 5 minutes to be created. |

oc --kubeconfig ~/5g-deployment-lab/sno2-kubeconfig -n openshift-talo-pre-cache get job pre-cache

NAME COMPLETIONS DURATION AGE

pre-cache 0/1 64s 64s

This job creates a Pod that runs the pre-cache process. As we can see below, 183 images need to be downloaded from our local registry for the task to be marked as successful.

oc --kubeconfig ~/5g-deployment-lab/sno2-kubeconfig logs job/pre-cache -n openshift-talo-pre-cache -f

highThresholdPercent: 85 diskSize:209124332 used:17648032

upgrades.pre-cache 2023-11-21T10:55:10+00:00 DEBUG Release index image processing done

7df5fe3b5fb7352b870735c7d7bd898d0959a9a49558d2ffb42dcd269e01752f

upgrades.pre-cache 2023-11-21T10:55:10+00:00 DEBUG Operators index is not specified. Operators won't be pre-cached

upgrades.pre-cache 2023-11-21T10:55:10+00:00 DEBUG Pulling quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:00162a72c1ae283977f0191ee216e15fe696838b6d7addd8250ff8c5b474cc61 [1/183]

upgrades.pre-cache 2023-11-21T10:55:10+00:00 DEBUG Pulling quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:00eaf204536112ed09a10e4c70f9a8dc6827726bf9bc34f279a9156b881a7a2a [2/183]

upgrades.pre-cache 2023-11-21T10:55:10+00:00 DEBUG Pulling quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:02d61dfc59ac70096dffadda38a52829cd61c9c016e54d2b6d78eb5182d2b19a [3/183]

.

.

.

upgrades.pre-cache 2023-11-21T11:00:48+00:00 DEBUG Pulling quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:ff7cfeec16898c293222ac1422841440cdeffefa7d489757e71999d5305425f8 [182/183]

upgrades.pre-cache 2023-11-21T11:00:48+00:00 DEBUG Pulling quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:ff88f1a78dac067bb93d98818bcee9bed36de0b2a74f3ed42d15ad816a16f624 [183/183]

upgrades.pre-cache 2023-11-21T11:00:48+00:00 DEBUG Image pre-cache done

Once the pre-cache is done, the CGU state moves to NotEnabled and the Pod running the pre-cache task in both SNO clusters is deleted. At this moment, TALM is waiting for us to acknowledge the start of the upgrade.
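The [n/183] counters in the pre-cache log make it easy to follow progress without reading every line. This is a small sketch that assumes the log keeps the "[current/total]" format shown in this lab's output; precache_progress is a hypothetical helper name.

```shell
# Summarize pre-cache progress from job log lines on stdin.
# Looks for the last "[current/total]" counter and prints it with a
# percentage (truncated to a whole number). Prints nothing if no counter
# has been logged yet.
precache_progress() {
  awk 'match($0, /\[[0-9]+\/[0-9]+\]/) {
         counter = substr($0, RSTART + 1, RLENGTH - 2)   # strip the brackets
         split(counter, parts, "/")
         cur = parts[1]; total = parts[2]
       }
       END {
         if (total > 0) printf "%d/%d (%d%%)\n", cur, total, cur * 100 / total
       }'
}

# Usage (not executed here):
#   oc --kubeconfig ~/5g-deployment-lab/sno2-kubeconfig logs job/pre-cache \
#     -n openshift-talo-pre-cache | precache_progress
```

Since the pre-cache job is removed after it finishes, this only works while the job still exists on the spoke.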

| It can take up to 5 minutes for the CGU to report the new state. After that time, the precache objects and the openshift-talo-pre-cache namespace created in the managed clusters are automatically deleted. |
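Because the state change is not immediate, a script can poll for it rather than check once. This is a generic polling sketch, not a TALM feature: wait_for_output and cgu_state_now are hypothetical helpers, and the STATE column position assumes the NAMESPACE NAME AGE STATE DETAILS layout used in this lab.

```shell
# Poll a command until its output equals the wanted value or a timeout expires.
#   $1: wanted value, $2: timeout in seconds, $3: polling interval in seconds,
#   remaining arguments: the command to run on each attempt.
# Returns 0 on a match and 1 if the timeout is exceeded.
wait_for_output() {
  want=$1; timeout=$2; interval=$3
  shift 3
  waited=0
  while [ "$waited" -le "$timeout" ]; do
    if [ "$("$@" 2>/dev/null)" = "$want" ]; then
      return 0
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  return 1
}

# Current state of the update-europe-snos CGU (needs hub access; only defined here).
cgu_state_now() {
  oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu -A --no-headers |
    awk '$2 == "update-europe-snos" { print $4 }'
}

# Usage (not executed here): wait up to 10 minutes, checking every 15 seconds.
#   wait_for_output NotEnabled 600 15 cgu_state_now
```

The same helper can be reused later with Completed as the wanted value, using a timeout that matches the length of the maintenance window.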

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu -n ztp-policies update-europe-snos

NAMESPACE NAME AGE STATE DETAILS

ztp-policies update-europe-snos 29m NotEnabled Not enabled

Triggering the upgrade

Now that the pre-cache is done, we can trigger the update. As we said earlier, before the update is actually executed, a backup will be taken so that we can roll back. To trigger the update, we need to enable the CGU:

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig patch cgu update-europe-snos -n ztp-policies --type merge --patch '{"spec":{"enable":true}}'

Notice that the CGU state moved to InProgress, which means the upgrade process has started. In the details, you can see that the backup is in progress.

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu -n ztp-policies update-europe-snos

NAMESPACE NAME AGE STATE DETAILS

ztp-policies update-europe-snos 30m InProgress Backup in progress for 2 clusters

Connecting to any of our spoke clusters, we can see a new job called backup-agent being created.

| The backup job can take up to 5 minutes to be created. |

oc --kubeconfig ~/5g-deployment-lab/sno1-kubeconfig get jobs -A

NAMESPACE NAME COMPLETIONS DURATION AGE

assisted-installer assisted-installer-controller 1/1 26m 21h

openshift-image-registry image-pruner-28140480 1/1 5s 11h

openshift-operator-lifecycle-manager collect-profiles-28141110 1/1 5s 34m

openshift-operator-lifecycle-manager collect-profiles-28141125 1/1 4s 19m

openshift-operator-lifecycle-manager collect-profiles-28141140 1/1 4s 4m50s

openshift-talo-backup backup-agent 0/1 7s 7s

This job runs a Pod that executes a recovery procedure and stores all the required data in the /var/recovery folder of each spoke.

oc --kubeconfig ~/5g-deployment-lab/sno2-kubeconfig logs job/backup-agent -n openshift-talo-backup -f

INFO[0000] ------------------------------------------------------------

INFO[0000] Cleaning up old content...

INFO[0000] ------------------------------------------------------------

INFO[0000] Old directories deleted with contents

INFO[0000] Old contents have been cleaned up

INFO[0000] Available disk space : 154.98 GiB; Estimated disk space required for backup: 276.55 MiB

INFO[0000] Sufficient disk space found to trigger backup

INFO[0000] Upgrade recovery script written

INFO[0000] Running: bash -c /var/recovery/upgrade-recovery.sh --take-backup --dir /var/recovery

INFO[0000] ##### Wed Jul 5 10:57:53 UTC 2023: Taking backup

INFO[0000] ##### Wed Jul 5 10:57:53 UTC 2023: Wiping previous deployments and pinning active

INFO[0000] error: Out of range deployment index 1, expected < 1

INFO[0000] Deployment 0 is now pinned

INFO[0000] ##### Wed Jul 5 10:57:54 UTC 2023: Backing up container cluster and required files

INFO[0000] Certificate /etc/kubernetes/static-pod-certs/configmaps/etcd-serving-ca/ca-bundle.crt is missing. Checking in different directory

INFO[0000] Certificate /etc/kubernetes/static-pod-resources/etcd-certs/configmaps/etcd-serving-ca/ca-bundle.crt found!

INFO[0000] found latest kube-apiserver: /etc/kubernetes/static-pod-resources/kube-apiserver-pod-7

INFO[0000] found latest kube-controller-manager: /etc/kubernetes/static-pod-resources/kube-controller-manager-pod-11

INFO[0000] found latest kube-scheduler: /etc/kubernetes/static-pod-resources/kube-scheduler-pod-8

INFO[0000] found latest etcd: /etc/kubernetes/static-pod-resources/etcd-pod-3

INFO[0000] aa91d2b9d4ac8ef60ea81f11643fdaad14717e21a7a4f82b57f59667a02c92af

INFO[0000] etcdctl version: 3.5.6

INFO[0000] API version: 3.5

INFO[0000] {"level":"info","ts":"2023-11-21T10:57:54.421Z","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/var/recovery/cluster/snapshot_2023-07-05_105754__POSSIBLY_DIRTY__.db.part"}

INFO[0000] {"level":"info","ts":"2023-11-21T10:57:54.434Z","logger":"client","caller":"v3@v3.5.6/maintenance.go:212","msg":"opened snapshot stream; downloading"}

INFO[0000] {"level":"info","ts":"2023-11-21T10:57:54.434Z","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"https://192.168.125.40:2379"}

INFO[0001] {"level":"info","ts":"2023-11-21T10:57:55.204Z","logger":"client","caller":"v3@v3.5.6/maintenance.go:220","msg":"completed snapshot read; closing"}

INFO[0001] {"level":"info","ts":"2023-11-21T10:57:55.290Z","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"https://192.168.125.40:2379","size":"84 MB","took":"now"}

INFO[0001] {"level":"info","ts":"2023-11-21T10:57:55.290Z","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/var/recovery/cluster/snapshot_2023-07-05_105754__POSSIBLY_DIRTY__.db"}

INFO[0001] Snapshot saved at /var/recovery/cluster/snapshot_2023-07-05_105754__POSSIBLY_DIRTY__.db

INFO[0001] Deprecated: Use `etcdutl snapshot status` instead.

INFO[0001]

INFO[0001] {"hash":1007949646,"revision":43716,"totalKey":10582,"totalSize":83578880}

INFO[0001] snapshot db and kube resources are successfully saved to /var/recovery/cluster

INFO[0002] Command succeeded: cp -Ra /etc/ /var/recovery/etc/

INFO[0002] Command succeeded: cp -Ra /usr/local/ /var/recovery/usrlocal/

INFO[0002] Command succeeded: cp -Ra /var/lib/kubelet/ /var/recovery/kubelet/

INFO[0002] ##### Wed Jul 5 10:57:55 UTC 2023: Backup complete

INFO[0002] ------------------------------------------------------------

INFO[0002] backup has successfully finished ...

Once backups are finished for all clusters, the CGU state moves to BackupCompleted and then quickly moves to InProgress:

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu -A

NAMESPACE NAME AGE STATE DETAILS

ztp-install sno2 4h9m Completed All clusters are compliant with all the managed policies

ztp-policies update-europe-snos 28m BackupCompleted Backup is completed for all clustersAt this point, if we connect to any of our spoke clusters we can see that the upgrade process is actually taking place.

oc --kubeconfig ~/5g-deployment-lab/sno2-kubeconfig get clusterversion,nodesNAME VERSION AVAILABLE PROGRESSING SINCE STATUS

clusterversion.config.openshift.io/version 4.14.0 True True 30s Working towards 4.14.1: 101 of 842 done (11% complete), waiting on kube-apiserver

NAME STATUS ROLES AGE VERSION

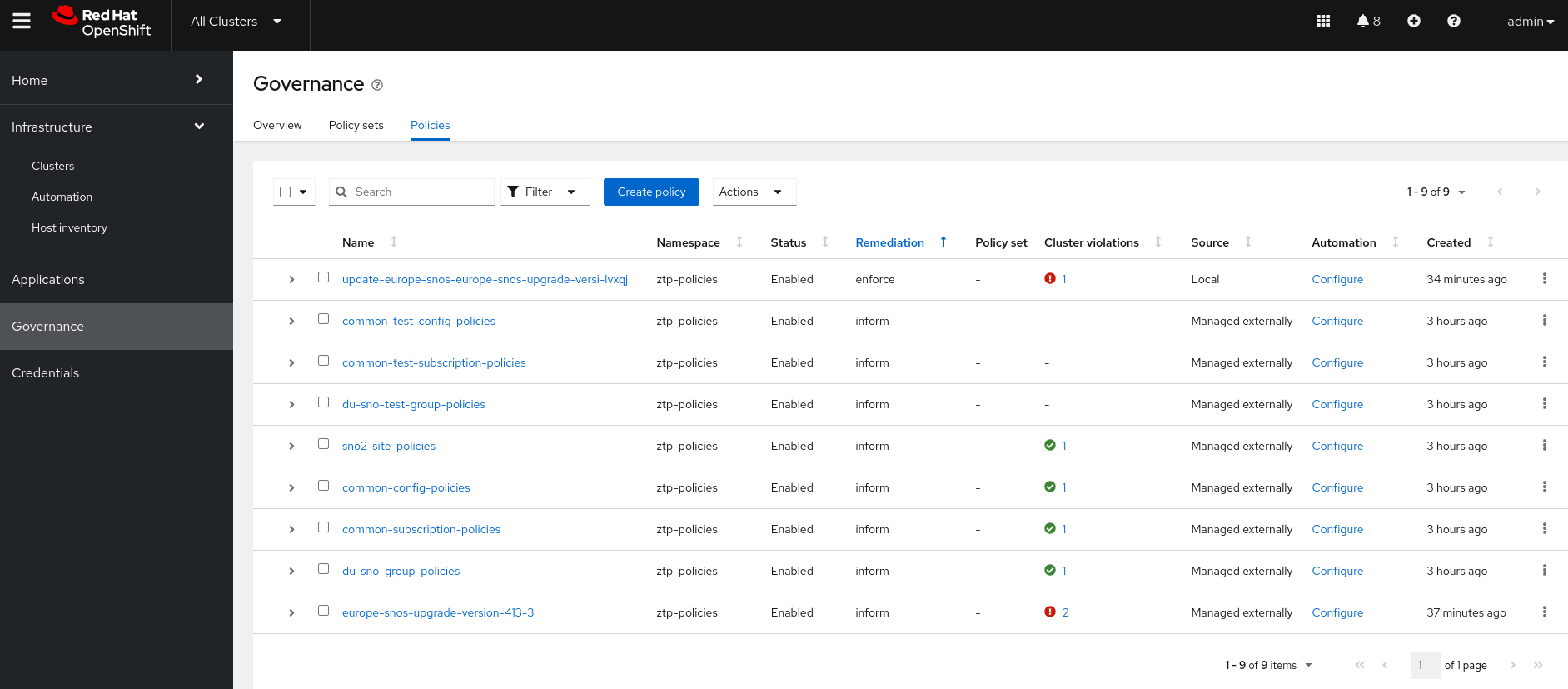

node/sno2.5g-deployment.lab Ready control-plane,master,worker 156m v1.27.6+f67aeb3Meanwhile, the clusters are upgrading we can take a look at the multicloud console and see that there is a new policy in enforce mode:

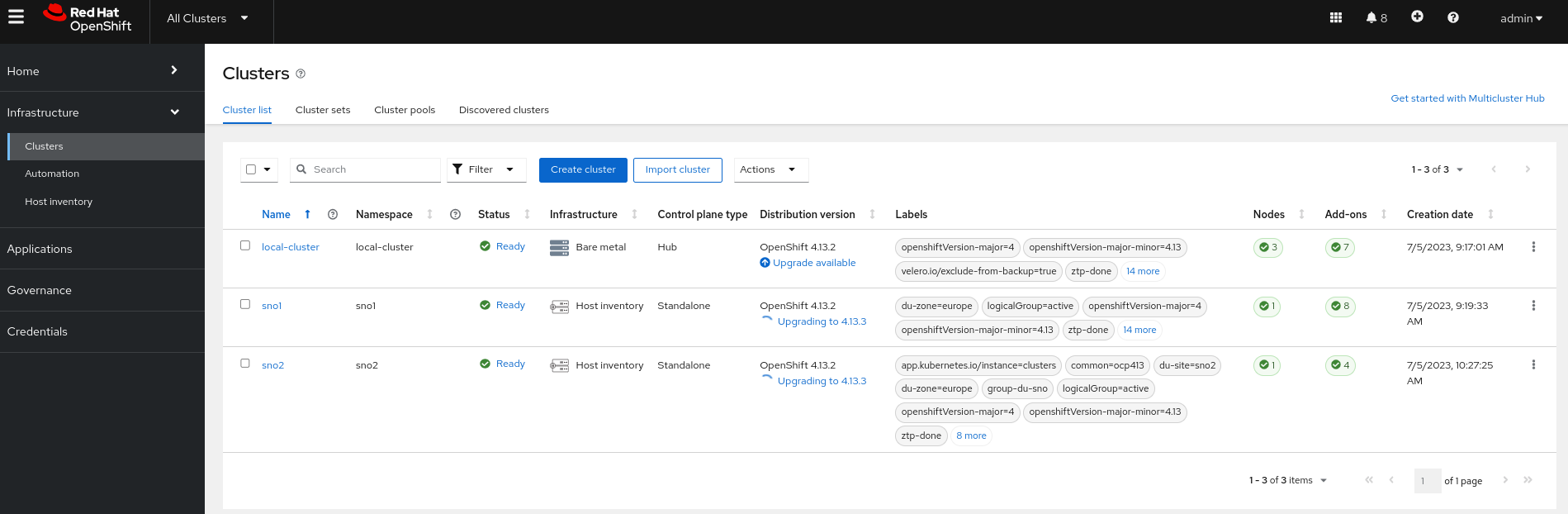

Moving to the Infrastructure → Cluster section of the multicloud console, we can also follow the upgrade of both clusters graphically:

Finally, our clusters are upgraded:

oc --kubeconfig ~/5g-deployment-lab/sno2-kubeconfig get clusterversion,nodes

NAME VERSION AVAILABLE PROGRESSING SINCE STATUS

clusterversion.config.openshift.io/version 4.14.1 True False 6m8s Cluster version is 4.14.1

NAME STATUS ROLES AGE VERSION

node/sno2.5g-deployment.lab Ready control-plane,master,worker 3h26m v1.27.6+f67aeb3

oc --kubeconfig ~/5g-deployment-lab/sno1-kubeconfig get clusterversion,nodes

NAME VERSION AVAILABLE PROGRESSING SINCE STATUS

clusterversion.config.openshift.io/version 4.14.1 True False 9m12s Cluster version is 4.14.1

NAME STATUS ROLES AGE VERSION

node/openshift-master-0 Ready control-plane,master,worker 22h v1.27.6+f67aeb3

Notice that the upgrade policy europe-snos-upgrade-version-414-1 is now compliant on both clusters. Also notice that, in order to save resources, the enforce policy is removed once the CGU is successfully applied.

And finally, the CGU will be Completed:

oc --kubeconfig ~/5g-deployment-lab/hub-kubeconfig get cgu -A

NAMESPACE NAME AGE STATE DETAILS

ztp-install local-cluster 23h Completed All clusters already compliant with the specified managed policies

ztp-install sno1 67m Completed All clusters already compliant with the specified managed policies

ztp-install sno2 3h5m Completed All clusters are compliant with all the managed policies

ztp-policies update-europe-snos 63m Completed All clusters are compliant with all the managed policies