Crafting the Telco RAN Reference Design Specification

In the previous section, the ZTP GitOps pipeline was put in place to deploy our Infrastructure as Code (IaC) and Configuration as Code (CaC). Here, we are going to focus on creating the Configuration as Code (CaC) for the OpenShift clusters that will be installed in the following sections. The configuration of the Telco RAN Reference Design Specification is applied using multiple PolicyGenTemplate objects.

We described how PolicyGenTemplate works in detail here, so let’s jump directly to the creation of the different templates.

| The configuration defined through these PolicyGenTemplate CRs is only a subset of what was described in Introduction to Telco Related Infrastructure Operators. This is for clarity and tailored to the lab environment. A full production environment for supporting telco 5G vRAN workloads would have additional configuration not included here, but described in detail as the v4.18 Telco RAN Reference Design Specification in the official documentation. |

| The commands below must be executed from the infrastructure host unless specified otherwise. |

Crafting Common Policies

The common policies apply to every cluster in our infrastructure that matches our binding rule. These policies are often used to configure things like CatalogSources, operator deployments, etc. that are common to all our clusters.

These configs may vary from release to release, which is why we create a common-418.yaml file. We will likely have a common configuration profile for each release we deploy.

If you check the binding rules, you see that we are targeting clusters labeled with common: "ocp418" and logicalGroup: "active". These labels are set in the ClusterInstance definition.
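To make the matching concrete, the following shell sketch mimics how binding rules select clusters: a policy is bound only when every rule is found among the cluster labels. The function name matches_binding_rules and the space-separated key=value encoding are assumptions made for illustration; the real matching happens on the hub, not in a script.

```shell
# Hypothetical helper: succeeds only when every binding rule (key=value)
# is present among the cluster labels (also key=value, space separated).
matches_binding_rules() {
  local labels=" $1 " rules="$2" rule
  for rule in $rules; do
    case "$labels" in
      *" $rule "*) ;;   # this rule is satisfied by a label
      *) return 1 ;;    # one missing rule is enough to exclude the cluster
    esac
  done
  return 0
}

# Labels such as those set in a ClusterInstance definition (illustrative values).
sno_labels='common=ocp418 logicalGroup=active hardware-type=hw-type-platform-1'
if matches_binding_rules "$sno_labels" 'common=ocp418 logicalGroup=active'; then
  echo "common policies would be bound to this cluster"
fi
```

A cluster labeled logicalGroup=testing would fail the second rule, so the common policies for active clusters would not be bound to it.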

- Create the common PolicyGenTemplate for v4.18 SNOs:

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/common-418.yaml
      ---
      apiVersion: ran.openshift.io/v1
      kind: PolicyGenTemplate
      metadata:
        name: "common"
        namespace: "ztp-policies"
      spec:
        bindingRules:
          common: "ocp418"
          logicalGroup: "active"
        mcp: master
        remediationAction: inform
        sourceFiles:
          - fileName: DefaultCatsrc.yaml
            metadata:
              name: redhat-operator-index
            spec:
              image: infra.5g-deployment.lab:8443/redhat/redhat-operator-index:v4.18-1742933492
            policyName: config-policy
          - fileName: ReduceMonitoringFootprint.yaml
            policyName: config-policy
          - fileName: StorageLVMSubscriptionNS.yaml
            metadata:
              annotations:
                workload.openshift.io/allowed: management
            policyName: subscriptions-policy
          - fileName: StorageLVMSubscriptionOperGroup.yaml
            policyName: subscriptions-policy
          - fileName: StorageLVMSubscription.yaml
            spec:
              channel: stable-4.18
              source: redhat-operator-index
              installPlanApproval: Automatic
            policyName: subscriptions-policy
          - fileName: LVMOperatorStatus.yaml
            policyName: subscriptions-policy
          - fileName: SriovSubscriptionNS.yaml
            policyName: "subscriptions-policy"
          - fileName: SriovSubscriptionOperGroup.yaml
            policyName: "subscriptions-policy"
          - fileName: SriovSubscription.yaml
            spec:
              channel: stable
              source: redhat-operator-index
              config:
                env:
                - name: "DEV_MODE"
                  value: "TRUE"
              installPlanApproval: Automatic
            policyName: "subscriptions-policy"
          - fileName: SriovOperatorStatus.yaml
            policyName: subscriptions-policy
      EOF

Crafting Group Policies

The group policies apply to a group of clusters that typically have something in common, for example they are SNOs, or they have similar hardware: SR-IOV cards, number of CPUs, number of disks, etc.

The CNF team has prepared some common tuning configurations that should be applied on every SNO DU deployed with similar hardware. In this section, we will be crafting these configurations.

If you check the binding rules you can see that we are targeting clusters labeled with group-du-sno: "", logicalGroup: "active" and hardware-type: "hw-type-platform-1". These labels are set in the ClusterInstance definition.

- Create the group PolicyGenTemplate for SNOs:

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/group-du-sno.yaml
      ---
      apiVersion: ran.openshift.io/v1
      kind: PolicyGenTemplate
      metadata:
        name: "du-sno"
        namespace: "ztp-policies"
      spec:
        bindingRules:
          group-du-sno: ""
          logicalGroup: "active"
          hardware-type: "hw-type-platform-1"
        mcp: master
        remediationAction: inform
        sourceFiles:
          - fileName: DisableSnoNetworkDiag.yaml
            policyName: "group-policy"
          - fileName: DisableOLMPprof.yaml # wave 10
            policyName: "group-policy"
          - fileName: ConsoleOperatorDisable.yaml
            policyName: "group-policy"
          - fileName: SriovOperatorConfig.yaml
            policyName: "group-policy"
            # Using hub templating to check whether the SR-IOV card is supported for this hw type
            spec:
              disableDrain: true
              enableOperatorWebhook: '{{hub fromConfigMap "" "group-hardware-types-configmap" (printf "%s-supported-sriov-nic" (index .ManagedClusterLabels "hardware-type")) | toBool hub}}'
          - fileName: StorageLVMCluster.yaml
            # Using hub templating to obtain the storage device name for this hw type
            spec:
              storage:
                deviceClasses:
                - name: vg1
                  thinPoolConfig:
                    name: thin-pool-1
                    sizePercent: 90
                    overprovisionRatio: 10
                  deviceSelector:
                    paths:
                    - '{{hub fromConfigMap "" "group-hardware-types-configmap" (printf "%s-storage-path" (index .ManagedClusterLabels "hardware-type")) hub}}'
            policyName: "group-policy"
          - fileName: PerformanceProfile.yaml
            # Using hub templating to obtain the tuning config for this hw type
            policyName: "group-policy"
            metadata:
              annotations:
                kubeletconfig.experimental: |
                  {"topologyManagerScope": "pod", "systemReserved": {"memory": "3Gi"} }
            spec:
              cpu:
                isolated: '{{hub fromConfigMap "" "group-hardware-types-configmap" (printf "%s-cpu-isolated" (index .ManagedClusterLabels "hardware-type")) hub}}'
                reserved: '{{hub fromConfigMap "" "group-hardware-types-configmap" (printf "%s-cpu-reserved" (index .ManagedClusterLabels "hardware-type")) hub}}'
              hugepages:
                defaultHugepagesSize: '{{hub fromConfigMap "" "group-hardware-types-configmap" (printf "%s-hugepages-default" (index .ManagedClusterLabels "hardware-type")) hub}}'
                pages:
                  - count: '{{hub fromConfigMap "" "group-hardware-types-configmap" (printf "%s-hugepages-count" (index .ManagedClusterLabels "hardware-type")) | toInt hub}}'
                    size: '{{hub fromConfigMap "" "group-hardware-types-configmap" (printf "%s-hugepages-size" (index .ManagedClusterLabels "hardware-type")) hub}}'
              numa:
                topologyPolicy: single-numa-node
              realTimeKernel:
                enabled: false
              globallyDisableIrqLoadBalancing: false
              # WorkloadHints defines the set of upper level flags for different type of workloads.
              # The configuration below is set for a low latency, performance mode.
              workloadHints:
                realTime: true
                highPowerConsumption: false
                perPodPowerManagement: false
          - fileName: TunedPerformancePatch.yaml
            policyName: "group-policy"
            spec:
              profile:
                - name: performance-patch
                  data: |
                    [main]
                    summary=Configuration changes profile inherited from performance created tuned
                    include=openshift-node-performance-openshift-node-performance-profile
                    [sysctl]
                    # When using the standard (non-realtime) kernel, remove the kernel.timer_migration override from the [sysctl] section
                    # kernel.timer_migration=0
                    [scheduler]
                    group.ice-ptp=0:f:10:*:ice-ptp.*
                    group.ice-gnss=0:f:10:*:ice-gnss.*
                    [service]
                    service.stalld=start,enable
                    service.chronyd=stop,disable
      EOF

  By leveraging hub-side templating, we reduce the number of policies on our hub cluster. This makes the clusters’ deployment and maintenance process simpler. Notice that with large fleets of clusters, per-site configurations can quickly become hard to maintain.

- We’re using policy templating, so we need to create a ConfigMap with the templating values to be used. Notice that the following resource contains values for multiple hardware specifications that we may have in our infrastructure. In the policy we just created, the values are obtained depending on the hardware-type label assigned to each cluster. This label is set in the ClusterInstance definition. More information about template processing can be found in the Red Hat Advanced Cluster Management documentation.

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/group-hardware-types-configmap.yaml
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: group-hardware-types-configmap
        namespace: ztp-policies
        annotations:
          argocd.argoproj.io/sync-options: Replace=true
      data:
        # PerformanceProfile.yaml
        hw-type-platform-1-cpu-isolated: "4-11"
        hw-type-platform-1-cpu-reserved: "0-3"
        hw-type-platform-1-hugepages-default: "1G"
        hw-type-platform-1-hugepages-count: "4"
        hw-type-platform-1-hugepages-size: "1G"
        hw-type-platform-1-supported-sriov-nic: "false"
        # StorageLVMCluster.yaml
        hw-type-platform-1-storage-path: "/dev/vdb"
        hw-type-platform-2-cpu-isolated: "2-39,42-79"
        hw-type-platform-2-cpu-reserved: "0-1,40-41"
        hw-type-platform-2-hugepages-default: "256M"
        hw-type-platform-2-hugepages-count: "16"
        hw-type-platform-2-hugepages-size: "1G"
        hw-type-platform-2-supported-sriov-nic: "true"
        hw-type-platform-2-storage-path: "/dev/nvme0n1"
      EOF

- Next, let’s include the DU validator policy crafted by the CNF team. It will let us know if the specific telco RAN RDS group policies are eventually applied.

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/group-du-sno-validator.yaml
      ---
      apiVersion: ran.openshift.io/v1
      kind: PolicyGenTemplate
      metadata:
        name: "du-sno-validator"
        namespace: "ztp-policies"
      spec:
        bindingRules:
          group-du-sno: ""
          logicalGroup: "active"
        bindingExcludedRules:
          ztp-done: ""
        mcp: "master"
        sourceFiles:
          - fileName: validatorCRs/informDuValidator.yaml
            remediationAction: inform
            policyName: "validation"
      EOF
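The hub-side templates in the group policy build each ConfigMap key by joining the cluster's hardware-type label with a fixed suffix, then read the value stored under that key. A minimal shell sketch of that lookup follows; lookup_hw_value is a hypothetical helper, and the case statement stands in for a few entries of group-hardware-types-configmap.

```shell
# Sketch of: fromConfigMap "" "group-hardware-types-configmap"
#              (printf "%s-<suffix>" <hardware-type label>)
# lookup_hw_value is a hypothetical name; only a few keys are mirrored here.
lookup_hw_value() {
  local hw_type="$1" suffix="$2" key
  key="$(printf '%s-%s' "$hw_type" "$suffix")"
  case "$key" in
    hw-type-platform-1-cpu-isolated) echo "4-11" ;;
    hw-type-platform-1-cpu-reserved) echo "0-3" ;;
    hw-type-platform-2-cpu-isolated) echo "2-39,42-79" ;;
    hw-type-platform-2-cpu-reserved) echo "0-1,40-41" ;;
    *) echo "missing key: $key" >&2; return 1 ;;
  esac
}

lookup_hw_value hw-type-platform-1 cpu-isolated   # prints 4-11
lookup_hw_value hw-type-platform-2 cpu-reserved   # prints 0-1,40-41
```

The same policy therefore yields different CPU sets on different hardware, which is why one group policy can serve several hardware types as long as the ConfigMap carries a full set of keys for each of them.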

Crafting Site Policies

Site policies apply to a specific cluster or clusters in our site. They usually configure settings that are very specific to a single cluster or to a small subset of clusters. For example, we could configure the SR-IOV network devices for a group of clusters if they share the same number of network interfaces, NIC model, etc. Otherwise, we would need different bindings for different clusters.

We are going to create the SR-IOV network device configurations for the OpenShift clusters whose hardware type is hw-type-platform-1 (see the bindingRules). Servers of this type are built with two virtual Intel IGB network devices that are SR-IOV capable. Notice that this policy leverages hub-side templating. Therefore, it targets all clusters with this specific hardware configuration, not only one cluster.

- Create the du-sno-sites PolicyGenTemplate for the clusters that contain the hw-type-platform-1 hardware type in our 5glab site.

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/sites/hub-1/sites-specific.yaml
      ---
      apiVersion: ran.openshift.io/v1
      kind: PolicyGenTemplate
      metadata:
        name: "du-sno-sites"
        namespace: "ztp-policies"
      spec:
        bindingRules:
          common: "ocp418"
          logicalGroup: "active"
          hardware-type: "hw-type-platform-1"
        mcp: master
        remediationAction: inform
        sourceFiles:
          - fileName: SriovNetwork.yaml
            # Using hub templating to obtain the SR-IOV config of each SNO
            policyName: "sites-policy"
            metadata:
              name: "sriov-nw-du-netdev"
            spec:
              ipam: '{"type": "host-local","ranges": [[{"subnet": "192.168.100.0/24"}]],"dataDir": "/run/my-orchestrator/container-ipam-state-1"}'
              resourceName: '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-resourcename1" .ManagedClusterName) hub}}'
              spoofChk: "off"
              trust: "on"
          - fileName: SriovNetworkNodePolicy.yaml
            policyName: "sites-policy"
            complianceType: mustonlyhave
            metadata:
              name: '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-resourcename1" .ManagedClusterName) hub}}'
            spec:
              deviceType: netdevice
              needVhostNet: false
              mtu: 1500
              linkType: eth
              isRdma: false
              nicSelector:
                vendor: "8086"
                deviceID: "10c9"
                pfNames:
                  - '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-sriovnic1" .ManagedClusterName) hub}}'
              numVfs: 2
              resourceName: '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-resourcename1" .ManagedClusterName) hub}}'
          - fileName: SriovNetwork.yaml
            policyName: "sites-policy"
            metadata:
              name: "sriov-nw-du-vfio"
            spec:
              ipam: '{"type": "host-local","ranges": [[{"subnet": "192.168.100.0/24"}]],"dataDir": "/run/my-orchestrator/container-ipam-state-1"}'
              resourceName: '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-resourcename2" .ManagedClusterName) hub}}'
              spoofChk: "off"
              trust: "on"
          - fileName: SriovNetworkNodePolicy.yaml
            policyName: "sites-policy"
            metadata:
              name: '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-resourcename2" .ManagedClusterName) hub}}'
            spec:
              deviceType: vfio-pci
              mtu: 1500
              linkType: eth
              isRdma: false
              needVhostNet: false
              nicSelector:
                vendor: "8086"
                deviceID: "10c9"
                pfNames:
                  - '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-sriovnic2" .ManagedClusterName) hub}}'
              numVfs: 2
              resourceName: '{{hub fromConfigMap "" "site-data-configmap" (printf "%s-resourcename2" .ManagedClusterName) hub}}'
      EOF

  By leveraging hub-side templating, we reduce the number of policies on our hub cluster. This makes the clusters’ deployment and maintenance process a lot less cumbersome. Notice that with large fleets of clusters, per-site configurations can quickly become hard to maintain.

- We’re using policy templating, so we have to create a ConfigMap with the templating values to be used. Observe that the following manifest contains SR-IOV information for each cluster deployed by our Hub-1. This network information is required to configure the SR-IOV network devices and provide SR-IOV capabilities to the Pods deployed in our SNO clusters. The previous policy captures the names of the SR-IOV interfaces (PFs) recognized on the host node, which present additional virtual functions (VFs) on your OpenShift cluster. Those values are looked up using the name of the cluster managed by the hub: the key is not built from a label set in the ClusterInstance definition, but from ManagedClusterName, i.e., the name of the cluster.

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/sites/hub-1/site-data-hw-1-configmap.yaml
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: site-data-configmap
        namespace: ztp-policies
        annotations:
          argocd.argoproj.io/sync-options: Replace=true
      data:
        # SR-IOV configuration
        sno-seed-resourcename1: "igb-enp4s0"
        sno-seed-resourcename2: "igb-enp5s0"
        sno-seed-sriovnic1: "enp4s0"
        sno-seed-sriovnic2: "enp5s0"
        sno-abi-resourcename1: "virt-enp4s0"
        sno-abi-resourcename2: "virt-enp5s0"
        sno-abi-sriovnic1: "enp4s0"
        sno-abi-sriovnic2: "enp5s0"
        sno-ibi-resourcename1: "virt-enp4s0"
        sno-ibi-resourcename2: "virt-enp5s0"
        sno-ibi-sriovnic1: "enp4s0"
        sno-ibi-sriovnic2: "enp5s0"
      EOF

  More information about template processing can be found in the Red Hat Advanced Cluster Management documentation.
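Because the site templates look keys up by ManagedClusterName, a cluster with an absent key would presumably leave its policy unresolved. The sketch below is one hypothetical way to check the manifest before pushing it: missing_site_keys (a name invented here) prints any of the four expected keys that are absent for a given cluster. The simple grep match assumes the flat key layout shown in this lab.

```shell
# Hypothetical check: print the per-cluster keys that are missing from a
# site-data ConfigMap manifest; returns non-zero if any key is absent.
missing_site_keys() {
  local manifest="$1" cluster="$2" suffix rc=0
  for suffix in resourcename1 resourcename2 sriovnic1 sriovnic2; do
    if ! grep -Eq "^[[:space:]]*${cluster}-${suffix}:" "$manifest"; then
      echo "${cluster}-${suffix}"
      rc=1
    fi
  done
  return "$rc"
}

# Run against the lab manifest only if it is present on this host.
manifest=~/5g-deployment-lab/ztp-repository/site-policies/sites/hub-1/site-data-hw-1-configmap.yaml
if [ -f "$manifest" ]; then
  for cluster in sno-seed sno-abi sno-ibi; do
    missing_site_keys "$manifest" "$cluster" || echo "incomplete data for $cluster" >&2
  done
fi
```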

Adding Custom Content (source-crs)

If you require cluster configuration changes outside of the base GitOps Zero Touch Provisioning (ZTP) pipeline configuration, you can add content to the GitOps ZTP library. The base source custom resources (CRs) that are included in the ztp-generate container that you deploy with the GitOps ZTP pipeline can be augmented with custom content as required.

| We recommend a directory structure to keep reference manifests corresponding to the y-stream release. |

In this lab we do not need extra content; however, we are going to pave the way for future requirements. Let’s first extract the predefined source-crs from the 4.18 version of the ztp-generate container to the reference-crs folder.

    podman login infra.5g-deployment.lab:8443 -u admin -p r3dh4t1! --tls-verify=false
    podman run --log-driver=none --rm --tls-verify=false infra.5g-deployment.lab:8443/openshift4/ztp-site-generate-rhel8:v4.18.0-39 extract /home/ztp/source-crs --tar | tar x -C ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/source-crs/reference-crs/

Notice that we can add content not included in the ZTP container image by creating the required source CR in the source-crs/custom-crs folder. Once pushed to our Git repo, we can reference it in any of the common, group or site PolicyGenTemplates. More information on deploying additional changes to clusters can be found in the docs.
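As a rough sketch of that workflow, the hypothetical helper below copies a custom source CR into source-crs/custom-crs and prints the path we would expect to use as fileName in a PolicyGenTemplate. Both the helper name stage_custom_cr and the assumption that fileName is written relative to source-crs (custom-crs/<file>) are illustrative; check the official documentation for the exact convention of your release.

```shell
# Hypothetical helper: stage a custom source CR next to the reference CRs.
#   $1 = path to the custom CR file
#   $2 = policies directory that contains source-crs/ (e.g. fleet/active)
stage_custom_cr() {
  local cr_file="$1" policies_dir="$2" target
  target="$policies_dir/source-crs/custom-crs"
  mkdir -p "$target" || return 1
  cp "$cr_file" "$target/" || return 1
  # Assumed convention: fileName is relative to the source-crs directory.
  echo "custom-crs/$(basename "$cr_file")"
}

# Example (my-custom-cr.yaml is a placeholder name, not a file from this lab):
# stage_custom_cr ./my-custom-cr.yaml ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active
```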

Crafting Testing Policies

Testing policies before applying them to our production clusters is a must. To do that, we will create a set of testing policies (which will usually be very similar to the production ones) that target clusters labeled with logicalGroup: "testing". We won’t go over every file: the files that will be created are the same as in active (also known as production), but with different names and binding rules.

- Create the common testing PolicyGenTemplate for v4.18 SNOs:

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/testing/common-418.yaml
      ---
      apiVersion: ran.openshift.io/v1
      kind: PolicyGenTemplate
      metadata:
        name: "common-test"
        namespace: "ztp-policies"
      spec:
        bindingRules:
          common: "ocp418"
          logicalGroup: "testing"
        mcp: master
        remediationAction: inform
        sourceFiles:
          - fileName: DefaultCatsrc.yaml
            metadata:
              name: redhat-operator-index
            spec:
              image: infra.5g-deployment.lab:8443/redhat/redhat-operator-index:v4.18-1742933492
            policyName: config-policy
          - fileName: ReduceMonitoringFootprint.yaml
            policyName: config-policy
          - fileName: StorageLVMSubscriptionNS.yaml
            metadata:
              annotations:
                workload.openshift.io/allowed: management
            policyName: subscriptions-policy
          - fileName: StorageLVMSubscriptionOperGroup.yaml
            policyName: subscriptions-policy
          - fileName: StorageLVMSubscription.yaml
            spec:
              channel: stable-4.18
              source: redhat-operator-index
            policyName: subscriptions-policy
          - fileName: LVMOperatorStatus.yaml
            policyName: subscriptions-policy
          - fileName: SriovSubscriptionNS.yaml
            policyName: "subscriptions-policy"
          - fileName: SriovSubscriptionOperGroup.yaml
            policyName: "subscriptions-policy"
          - fileName: SriovSubscription.yaml
            spec:
              channel: stable
              source: redhat-operator-index
              config:
                env:
                - name: "DEV_MODE"
                  value: "TRUE"
            policyName: "subscriptions-policy"
          - fileName: SriovOperatorStatus.yaml
            policyName: subscriptions-policy
      EOF

- Create the group testing PolicyGenTemplate for SNOs:

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/testing/group-du-sno.yaml
      ---
      apiVersion: ran.openshift.io/v1
      kind: PolicyGenTemplate
      metadata:
        name: "du-sno-test"
        namespace: "ztp-policies"
      spec:
        bindingRules:
          group-du-sno: ""
          logicalGroup: "testing"
          hardware-type: "hw-type-platform-1"
        mcp: master
        remediationAction: inform
        sourceFiles:
          - fileName: DisableSnoNetworkDiag.yaml
            policyName: "group-policy"
          - fileName: DisableOLMPprof.yaml # wave 10
            policyName: "group-policy"
          - fileName: SriovOperatorConfig.yaml
            policyName: "group-policy"
            spec:
              disableDrain: true
              enableOperatorWebhook: '{{hub fromConfigMap "" "group-hardware-types-configmap-test" (printf "%s-supported-sriov-nic" (index .ManagedClusterLabels "hardware-type")) | toBool hub}}'
          - fileName: StorageLVMCluster.yaml
            spec:
              storage:
                deviceClasses:
                - name: vg1
                  thinPoolConfig:
                    name: thin-pool-1
                    sizePercent: 90
                    overprovisionRatio: 10
                  deviceSelector:
                    paths:
                    - '{{hub fromConfigMap "" "group-hardware-types-configmap-test" (printf "%s-storage-path" (index .ManagedClusterLabels "hardware-type")) hub}}'
            policyName: "group-policy"
          - fileName: PerformanceProfile.yaml
            policyName: "group-policy"
            metadata:
              annotations:
                kubeletconfig.experimental: |
                  {"topologyManagerScope": "pod", "systemReserved": {"memory": "3Gi"} }
            spec:
              cpu:
                isolated: '{{hub fromConfigMap "" "group-hardware-types-configmap-test" (printf "%s-cpu-isolated" (index .ManagedClusterLabels "hardware-type")) hub}}'
                reserved: '{{hub fromConfigMap "" "group-hardware-types-configmap-test" (printf "%s-cpu-reserved" (index .ManagedClusterLabels "hardware-type")) hub}}'
              hugepages:
                defaultHugepagesSize: '{{hub fromConfigMap "" "group-hardware-types-configmap-test" (printf "%s-hugepages-default" (index .ManagedClusterLabels "hardware-type")) hub}}'
                pages:
                  - count: '{{hub fromConfigMap "" "group-hardware-types-configmap-test" (printf "%s-hugepages-count" (index .ManagedClusterLabels "hardware-type")) | toInt hub}}'
                    size: '{{hub fromConfigMap "" "group-hardware-types-configmap-test" (printf "%s-hugepages-size" (index .ManagedClusterLabels "hardware-type")) hub}}'
              numa:
                topologyPolicy: single-numa-node
              realTimeKernel:
                enabled: false
              globallyDisableIrqLoadBalancing: false
              # WorkloadHints defines the set of upper level flags for different type of workloads.
              # The configuration below is set for a low latency, performance mode.
              workloadHints:
                realTime: true
                highPowerConsumption: false
                perPodPowerManagement: false
          - fileName: TunedPerformancePatch.yaml
            policyName: "group-policy"
            spec:
              profile:
                - name: performance-patch
                  data: |
                    [main]
                    summary=Configuration changes profile inherited from performance created tuned
                    include=openshift-node-performance-openshift-node-performance-profile
                    [sysctl]
                    # When using the standard (non-realtime) kernel, remove the kernel.timer_migration override from the [sysctl] section
                    # kernel.timer_migration=0
                    [scheduler]
                    group.ice-ptp=0:f:10:*:ice-ptp.*
                    group.ice-gnss=0:f:10:*:ice-gnss.*
                    [service]
                    service.stalld=start,enable
                    service.chronyd=stop,disable
      EOF

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/testing/group-hardware-types-configmap-test.yaml
      ---
      apiVersion: v1
      kind: ConfigMap
      metadata:
        name: group-hardware-types-configmap-test
        namespace: ztp-policies
        annotations:
          argocd.argoproj.io/sync-options: Replace=true
      data:
        # PerformanceProfile.yaml
        hw-type-platform-1-cpu-isolated: "4-11"
        hw-type-platform-1-cpu-reserved: "0-3"
        hw-type-platform-1-hugepages-default: "1G"
        hw-type-platform-1-hugepages-count: "4"
        hw-type-platform-1-hugepages-size: "1G"
        hw-type-platform-1-supported-sriov-nic: "false"
        hw-type-platform-1-storage-path: "/dev/vdb"
        hw-type-platform-2-cpu-isolated: "2-39,42-79"
        hw-type-platform-2-cpu-reserved: "0-1,40-41"
        hw-type-platform-2-hugepages-default: "256M"
        hw-type-platform-2-hugepages-count: "16"
        hw-type-platform-2-hugepages-size: "1G"
        hw-type-platform-2-supported-sriov-nic: "true"
        hw-type-platform-2-storage-path: "/dev/nvme0n1"
      EOF

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/testing/group-du-sno-validator.yaml
      ---
      apiVersion: ran.openshift.io/v1
      kind: PolicyGenTemplate
      metadata:
        name: "du-sno-validator-test"
        namespace: "ztp-policies"
      spec:
        bindingRules:
          group-du-sno: ""
          logicalGroup: "testing"
        bindingExcludedRules:
          ztp-done: ""
        mcp: "master"
        sourceFiles:
          - fileName: validatorCRs/informDuValidator.yaml
            remediationAction: inform
            policyName: "validation"
      EOF

At this point, the testing policies are the same as in production (active). In the future, you will want to apply these testing policies to the clusters running in your test environment before promoting changes to production (active).
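One hypothetical way to keep an eye on how far a testing manifest has drifted from its active counterpart is to undo the expected renames and diff the result. In the sketch below, diff_after_renames is an invented helper; the three sed rules reflect the naming pattern used in this lab (a -test suffix and the testing logical group) and would need adjusting for other conventions.

```shell
# Hypothetical helper: show what still differs between an active manifest and
# its testing counterpart once the expected renames are reverted.
# Returns 0 when nothing else differs.
diff_after_renames() {
  local active="$1" testing="$2"
  sed -e 's/-test"/"/g' \
      -e 's/-configmap-test/-configmap/g' \
      -e 's/logicalGroup: "testing"/logicalGroup: "active"/' \
      "$testing" | diff "$active" -
}

# Example:
# cd ~/5g-deployment-lab/ztp-repository/site-policies/fleet
# diff_after_renames active/common-418.yaml testing/common-418.yaml
```

Any remaining output is a change that exists in only one of the two environments, which is what you would review before promoting it.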

Configure Kustomization for Policies

We need to create the required kustomization files as we did for SiteConfigs. In this case, policies also require a namespace where they will be created. Therefore, we will create the required namespace and the kustomization files.

- Policies need to live in a namespace, so let’s add it to the repo:

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/policies-namespace.yaml
      ---
      apiVersion: v1
      kind: Namespace
      metadata:
        name: ztp-policies
        labels:
          name: ztp-policies
      EOF

- Create the required Kustomization files.

  You can see that we commented out the group-du-sno-validator.yaml files in our Kustomization files. Since this is a lab environment and we don’t have PTP/SR-IOV hardware, the validator policies won’t be able to verify our SNOs as well-configured DUs. We kept the files here so you know how a real environment should be configured.

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/kustomization.yaml
      ---
      apiVersion: kustomize.config.k8s.io/v1beta1
      kind: Kustomization
      resources:
        - fleet/
        - sites/
        - policies-namespace.yaml
      EOF

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/sites/kustomization.yaml
      ---
      apiVersion: kustomize.config.k8s.io/v1beta1
      kind: Kustomization
      bases:
        - hub-1/
      EOF

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/sites/hub-1/kustomization.yaml
      ---
      apiVersion: kustomize.config.k8s.io/v1beta1
      kind: Kustomization
      generators:
        - sites-specific.yaml
      resources:
        - site-data-hw-1-configmap.yaml
      EOF

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/kustomization.yaml
      ---
      apiVersion: kustomize.config.k8s.io/v1beta1
      kind: Kustomization
      resources:
        - active/
        - testing/
      EOF

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/kustomization.yaml
      ---
      apiVersion: kustomize.config.k8s.io/v1beta1
      kind: Kustomization
      generators:
        - common-418.yaml
        - group-du-sno.yaml
      #  - group-du-sno-validator.yaml
      resources:
        - group-hardware-types-configmap.yaml
      EOF

      cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/testing/kustomization.yaml
      ---
      apiVersion: kustomize.config.k8s.io/v1beta1
      kind: Kustomization
      generators:
        - common-418.yaml
        - group-du-sno.yaml
      #  - group-du-sno-validator.yaml
      resources:
        - group-hardware-types-configmap-test.yaml
      EOF

- At this point, we can push the changes to the repo.

      cd ~/5g-deployment-lab/ztp-repository
      git add --all
      git commit -m 'Added policies information'
      git push origin main
      cd ~
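A typo in a Kustomization file usually only surfaces once Argo CD tries to sync. As an optional sanity check that could be run before pushing, the sketch below verifies that every uncommented list entry in a kustomization.yaml exists next to it. check_kustomization is a hypothetical helper, and its simple line-based parsing assumes the flat layout used in this lab rather than arbitrary Kustomize syntax.

```shell
# Hypothetical pre-push check: every uncommented "- entry" line in
# <dir>/kustomization.yaml must exist as a file or directory inside <dir>.
check_kustomization() {
  local dir="$1" entry rc=0
  if [ ! -f "$dir/kustomization.yaml" ]; then
    echo "no kustomization.yaml in $dir" >&2
    return 1
  fi
  # Lines starting with '#' or '---' are skipped by the pattern below.
  for entry in $(sed -n 's/^[[:space:]]*-[[:space:]]\{1,\}\([^#[:space:]]*\).*$/\1/p' "$dir/kustomization.yaml"); do
    if [ ! -e "$dir/$entry" ]; then
      echo "missing: $dir/$entry" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Walk the directories created in this section, if the repo is present.
repo=~/5g-deployment-lab/ztp-repository/site-policies
if [ -d "$repo" ]; then
  for dir in "$repo" "$repo/sites" "$repo/sites/hub-1" \
             "$repo/fleet" "$repo/fleet/active" "$repo/fleet/testing"; do
    check_kustomization "$dir" || echo "check failed for $dir" >&2
  done
fi
```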

Deploying the Telco 5G RAN RDS using the ZTP GitOps Pipeline

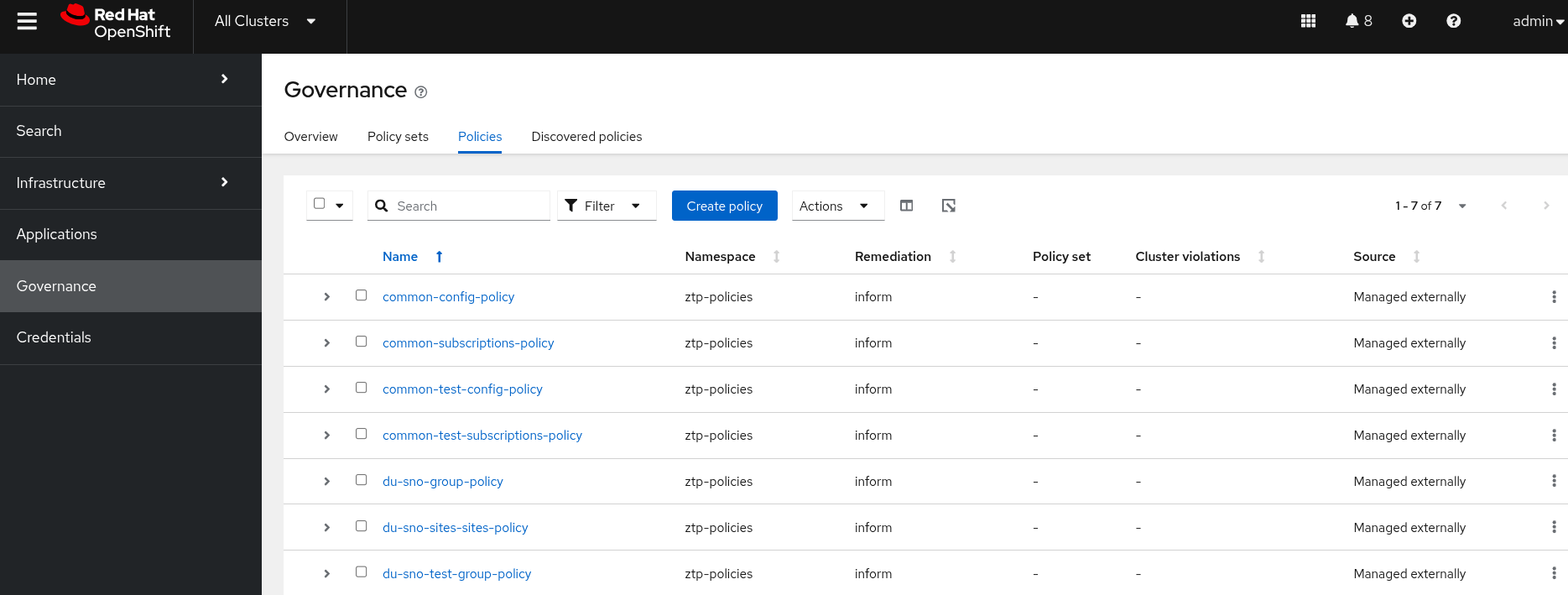

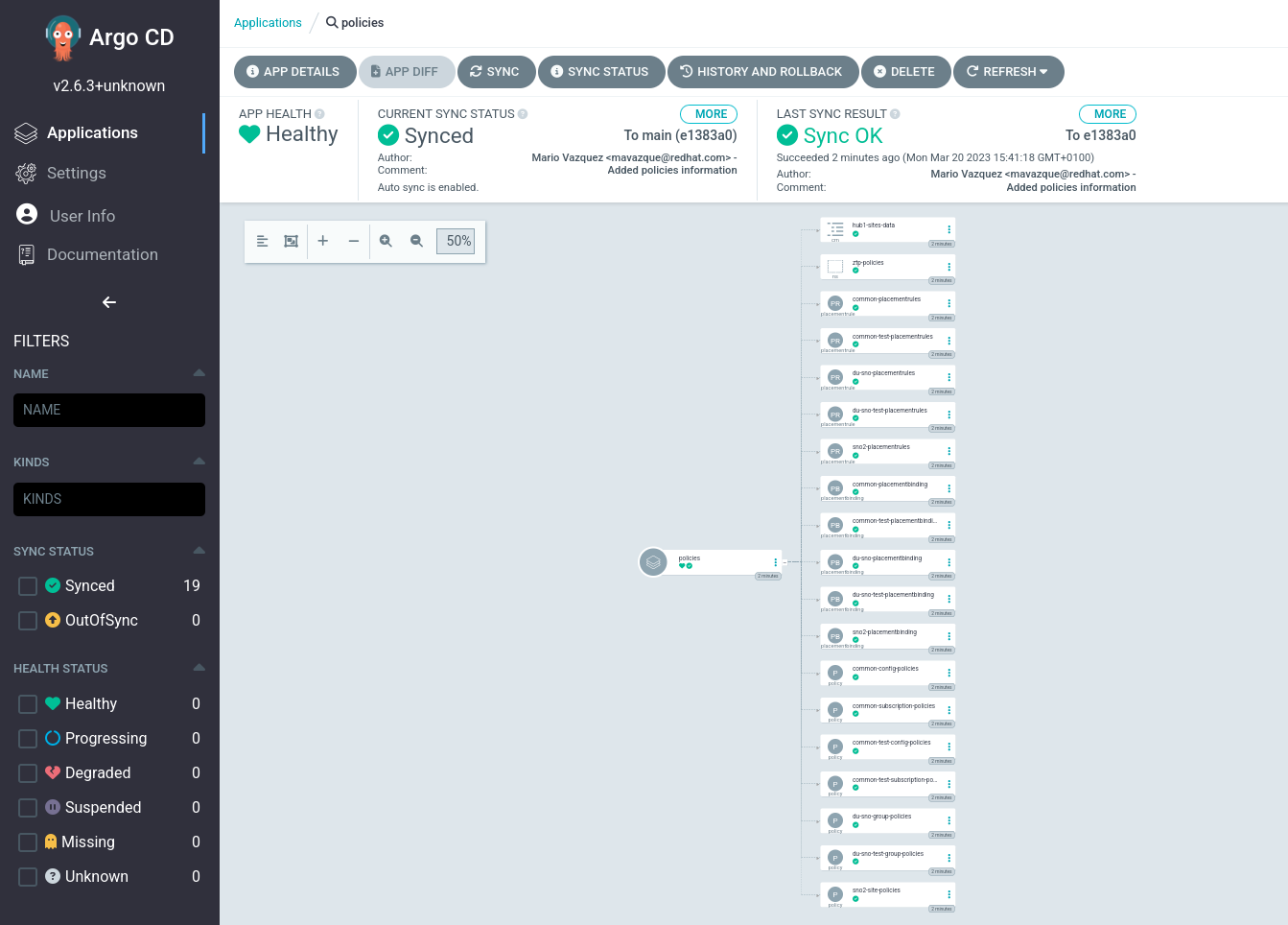

Once the changes are committed to the Git repository, Argo CD will synchronize the new resources and apply them to the hub cluster automatically. You can see how the policies application of the ZTP GitOps pipeline created all the configuration objects. If we check the policies app, this is what we see:

The resulting policies can be seen from the RHACM Console at https://console-openshift-console.apps.hub.5g-deployment.lab/multicloud/home/welcome. You can also access it through the preconfigured Firefox bookmark.

| It may take up to 5 minutes for the policies to show up because of the Argo CD sync period. |
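If you prefer the command line to refreshing the console, a small polling loop can wait out the sync period. The sketch below defines a generic helper, wait_for (a hypothetical name), that retries a command until it succeeds or a timeout expires. The commented example assumes a logged-in oc client and that the generated policy is named common-config-policy, which is what we would expect from the common PolicyGenTemplate but is not confirmed here.

```shell
# Hypothetical helper: run a command every <interval> seconds until it
# succeeds or roughly <timeout> seconds have elapsed.
#   wait_for <timeout> <interval> <command> [args...]
wait_for() {
  local timeout="$1" interval="$2" elapsed=0
  shift 2
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      return 1   # gave up: the command never succeeded
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  return 0
}

# Example (assumed policy name; requires access to the hub cluster):
# wait_for 300 15 oc get policy common-config-policy -n ztp-policies \
#   && echo "policies are present on the hub"
```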

- Access the RHACM console and log in with the OpenShift credentials.

- Once you’re in, click on Governance → Policies. You will see the following screen, where several policies are registered.

| The Telco 5G RAN RDS policies are ready but they are not being applied or compared to any cluster yet. That’s because we did not provision any SNO cluster. Let’s fix that by moving to the next section and deploying the first one. |