Preparing the ZTP GitOps Pipeline

In RAN environments we will be managing thousands of Single Node OpenShift (SNO) instances, and as such, a scalable and manageable way of defining our infrastructure is required.

By describing our infrastructure as code (IaC), the Git repository holds the declarative state of the fleet.

Introduction to the ClusterInstance

The ClusterInstance is an abstraction layer on top of the different components managed by the SiteConfig Operator, responsible for deploying OpenShift clusters using either the Agent Based Installation or the Image Based Installation flow. If you are familiar with the Agent Based Installation, and particularly with the Assisted Service, you will know that deploying a cluster with this service requires creating several Kubernetes objects, such as ClusterDeployment, InfraEnv, AgentClusterInstall, etc.

The ClusterInstance is fundamental for scaling the management of SNO fleets: it abstracts the complexity of those multiple Kubernetes objects into a single, simplified definition that describes the cluster deployment configuration in one place. In this link you can find an example of a ClusterInstance for a SNO deployment.

In the coming sections, we will create our own ClusterInstances using the Image Based Installation and Agent Based Installation flows to deploy a couple of SNO clusters in our lab environment.

| The steps below rely on the lab environment being up and running. |

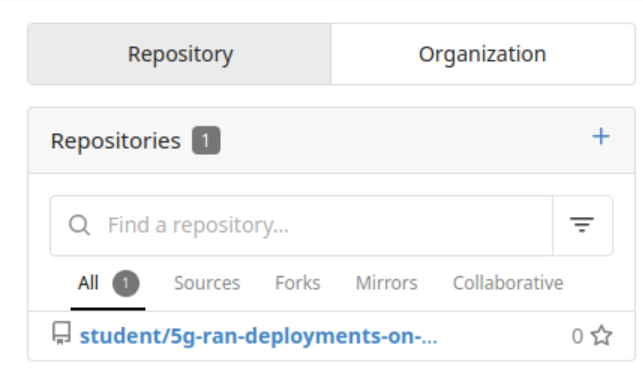

Git Repository

We need a Git repository to store our cluster configurations. We will create a new Git repository in the Git server running on the infrastructure node.

| Remember you can access the Git server through the pre-configured Firefox bookmark. |

- Log in to the Git server (user: student, password: student).
- You will see that one Git repository already exists. You must not change this repository; instead, you will create a new one.
- Click on the "+" next to Repositories.
- Use ztp-repository as Repository Name and click on Create Repository.
- You will get redirected to the new repository page.

Now that we have a repository ready to be used we will clone it to the infrastructure host.

| Remember you have a terminal ready at the Terminal 1 tab. |

mkdir -p ~/5g-deployment-lab/

git clone http://student:student@infra.5g-deployment.lab:3000/student/ztp-repository.git ~/5g-deployment-lab/ztp-repository/

We’re ready to start working in the Git repository folder structure. The following well-defined repository structure is essential for the efficiency of GitOps and ZTP, enabling a logical separation of cluster configurations (site-configs) and configuration policies (site-policies), which facilitates automated management at scale.

As we saw in the Git Repository Structure section, the Git repository we will be using will have the following structure:

├── site-configs
│   ├── hub-1
│   │   ├── pre-reqs
│   │   │   ├── sno-abi
│   │   │   └── sno-ibi
│   │   ├── extra-manifest
│   │   └── reference-manifest
│   │       └── 4.19
│   ├── pre-reqs
│   │   ├── sno-abi
│   │   └── sno-ibi
│   └── resources
└── site-policies
    └── fleet
        ├── active
        │   ├── v4.19
        │   │   └── source-crs
        │   │       ├── reference-crs
        │   │       └── custom-crs
        │   └── v4.18
        │       └── source-crs
        │           ├── reference-crs
        │           └── custom-crs
        └── testing
            └── v4.20
                └── source-crs
                    ├── reference-crs
                    └── custom-crs

Let’s replicate it:

| If this is the first time you are using Git on your machine, a message asking you to set up a Git username and e-mail may be shown. |
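If Git reports that it does not know who you are, setting a name and an e-mail address is enough to continue. A minimal sketch follows. The name and address are placeholders (assumptions, not lab values), and the sketch works on a scratch repository so it can be tried without touching any real configuration.

```shell
set -eu

# Scratch repository so the sketch does not touch any real configuration.
scratch_repo="$(mktemp -d)"
git init -q "$scratch_repo"

# Placeholder identity (hypothetical values); replace them with your own.
git -C "$scratch_repo" config user.name "Lab Student"
git -C "$scratch_repo" config user.email "student@example.com"

# Read the values back to confirm that Git stored them.
configured_name="$(git -C "$scratch_repo" config --get user.name)"
configured_email="$(git -C "$scratch_repo" config --get user.email)"
echo "name=$configured_name email=$configured_email"
```

In the lab, the same two `git config` commands can be run from ~/5g-deployment-lab/ztp-repository/, or with `--global` to apply the identity to every repository of the user.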

| The cluster name for the SNO that we will be deploying using the agent-based install will be sno-abi. On the other hand, sno-ibi is the name of the cluster that we will deploy using the image-based install flow. That’s why those folders exist in the repository structure that we are creating. |
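The commands that follow place a `.gitkeep` file in every directory. Git tracks files rather than directories, so an empty directory would be left out of the commit and the structure would never reach the Git server. A minimal sketch of that behaviour in a scratch repository (the two directory names are only examples):

```shell
set -eu

scratch_repo="$(mktemp -d)"
git init -q "$scratch_repo"
cd "$scratch_repo"

# Two directories: one left empty, one holding a .gitkeep placeholder.
mkdir -p site-configs/resources site-policies/fleet
touch site-policies/fleet/.gitkeep

git add --all

# Only the directory that contains a file shows up in the index.
tracked_files="$(git ls-files)"
echo "$tracked_files"
```

The empty site-configs/resources directory is absent from the listing, which is why each directory of the lab repository gets its own placeholder file.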

cd ~/5g-deployment-lab/ztp-repository/

mkdir -p site-configs/{hub-1,resources,pre-reqs/sno-abi,pre-reqs/sno-ibi,hub-1/extra-manifest,reference-manifest/4.19}

mkdir -p site-policies/{fleet/active/v4.19/source-crs/reference-crs,fleet/active/v4.19/source-crs/custom-crs,fleet/active/v4.18/source-crs/reference-crs,fleet/active/v4.18/source-crs/custom-crs}

mkdir -p site-policies/{fleet/testing/v4.20/source-crs/reference-crs,fleet/testing/v4.20/source-crs/custom-crs}

touch site-configs/{hub-1,resources,pre-reqs/sno-abi,pre-reqs/sno-ibi,hub-1/extra-manifest,reference-manifest/4.19}/.gitkeep

touch site-policies/{fleet,fleet/active,fleet/testing,fleet/active/v4.19,fleet/active/v4.18,fleet/testing/v4.20,fleet/active/v4.19/source-crs/reference-crs,fleet/active/v4.19/source-crs/custom-crs,fleet/active/v4.18/source-crs/reference-crs,fleet/active/v4.18/source-crs/custom-crs,fleet/testing/v4.20/source-crs/reference-crs,fleet/testing/v4.20/source-crs/custom-crs}/.gitkeep

git add --all

git commit -m 'Initialized repo structure'

git push origin main

Deploying the ZTP GitOps Pipeline

As we saw in previous sections, clusters are deployed using the ZTP GitOps Pipeline, but before starting we need to load it into our hub cluster.

We have already created the Git repository that will store our Infrastructure as Code (IaC) and Configuration as Code (CaC). The next step is deploying the ZTP GitOps Pipeline.

| The commands below must be executed from the infrastructure host unless specified otherwise. |

Before continuing, make sure you have the following tooling installed in your workstation:

In the infrastructure server, run the following command to extract the pipeline installation files:

mkdir -p ~/5g-deployment-lab/ztp-pipeline/argocd

podman login infra.5g-deployment.lab:8443 -u admin -p r3dh4t1! --tls-verify=false

podman run --log-driver=none --rm --tls-verify=false infra.5g-deployment.lab:8443/openshift4/ztp-site-generate-rhel8:v4.19.0-1 extract /home/ztp/argocd --tar | tar x -C ~/5g-deployment-lab/ztp-pipeline/argocd/

Now that we have extracted the pipeline content, we need to apply it to our hub cluster:

- Log in to the hub cluster.

| The command below must be changed to use the OpenShift admin password provided in the e-mail you received when the lab was ready. |

oc --kubeconfig ~/hub-kubeconfig login -u admin -p admin_password_here https://api.hub.5g-deployment.lab:6443 --insecure-skip-tls-verify=true

- Modify the ZTP GitOps Pipeline configuration to match our environment configuration.

  - Change the repository URL for the two ArgoApps:

| If you’re using macOS and you’re getting errors while running sed -i commands, make sure you are using gsed. If you do not have it available, please install it: brew install gnu-sed. |

sed -i "s|repoURL: .*|repoURL: http://infra.5g-deployment.lab:3000/student/ztp-repository.git|" ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/clusters-app.yaml

sed -i "s|repoURL: .*|repoURL: http://infra.5g-deployment.lab:3000/student/ztp-repository.git|" ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/policies-app.yaml

  - Change the repository path for the two ArgoApps:

sed -i "s|path: .*|path: site-configs|" ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/clusters-app.yaml

sed -i "s|path: .*|path: site-policies|" ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/policies-app.yaml

  - Change the repository branch for the two ArgoApps:

sed -i "s|targetRevision: .*|targetRevision: main|" ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/clusters-app.yaml

sed -i "s|targetRevision: .*|targetRevision: main|" ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/policies-app.yaml

- Include the openshift-adp namespace in the list of namespaces managed by the GitOps operator to ensure that policies related to OpenShift Data Protection (such as backup and restore operations) are also managed by the GitOps pipeline.

sed -i "/destinations:/a \ \ - namespace: \'openshift-adp\'\n \ \ \ server: '\*\'" ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/policies-app-project.yaml

Apply the ZTP GitOps Pipeline configuration:

oc --kubeconfig ~/hub-kubeconfig apply -k ~/5g-deployment-lab/ztp-pipeline/argocd/deployment/At this stage, we have our ZTP GitOps pipeline running and waiting for committing declarative Infrastructure and Configuration as Code to the

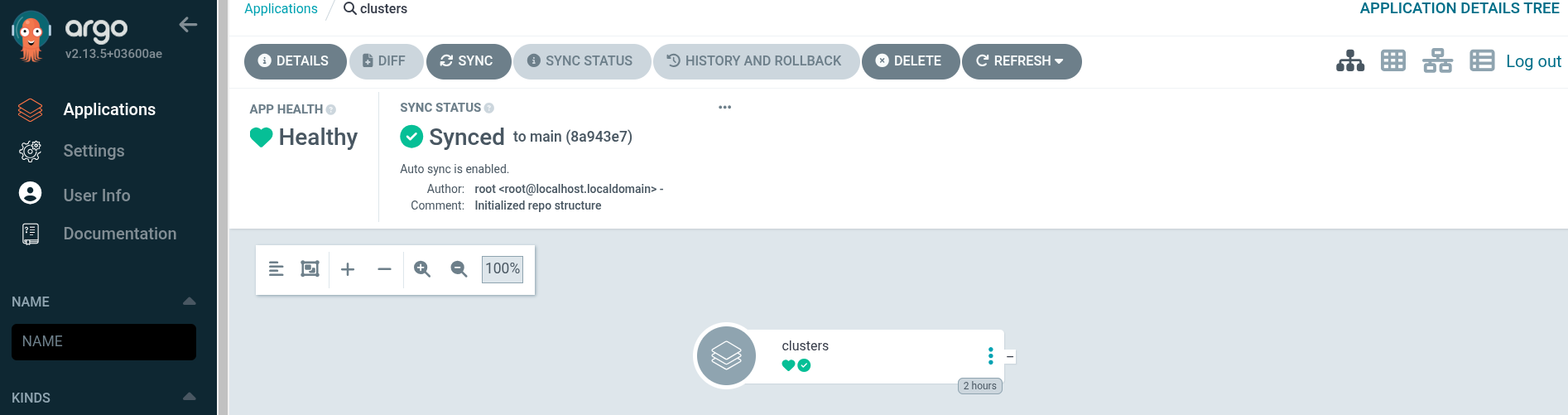

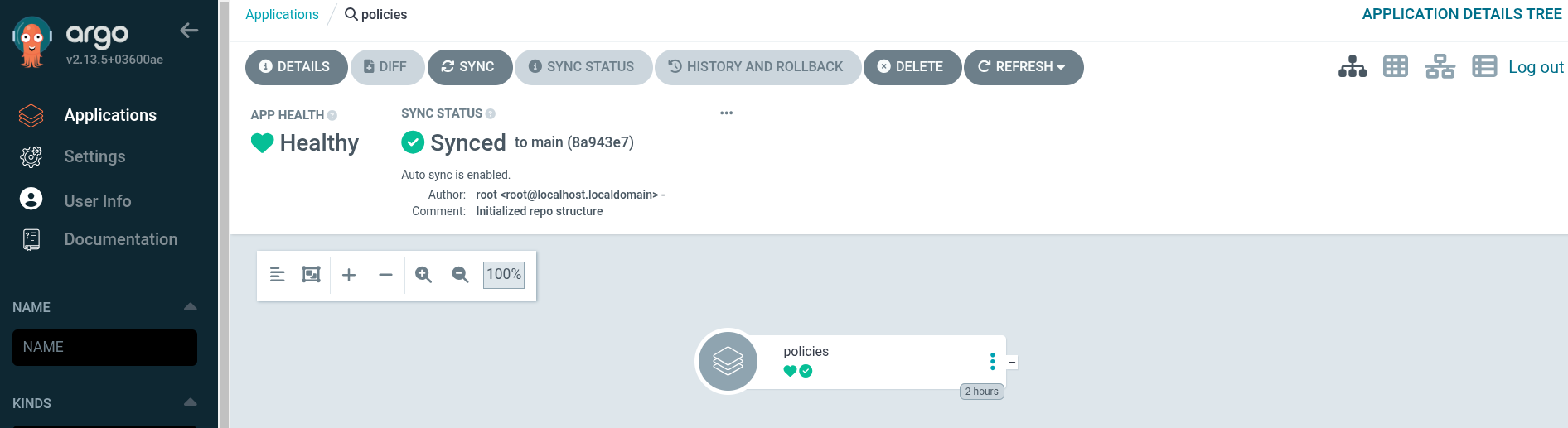

ztp-repositoryGit repository.-

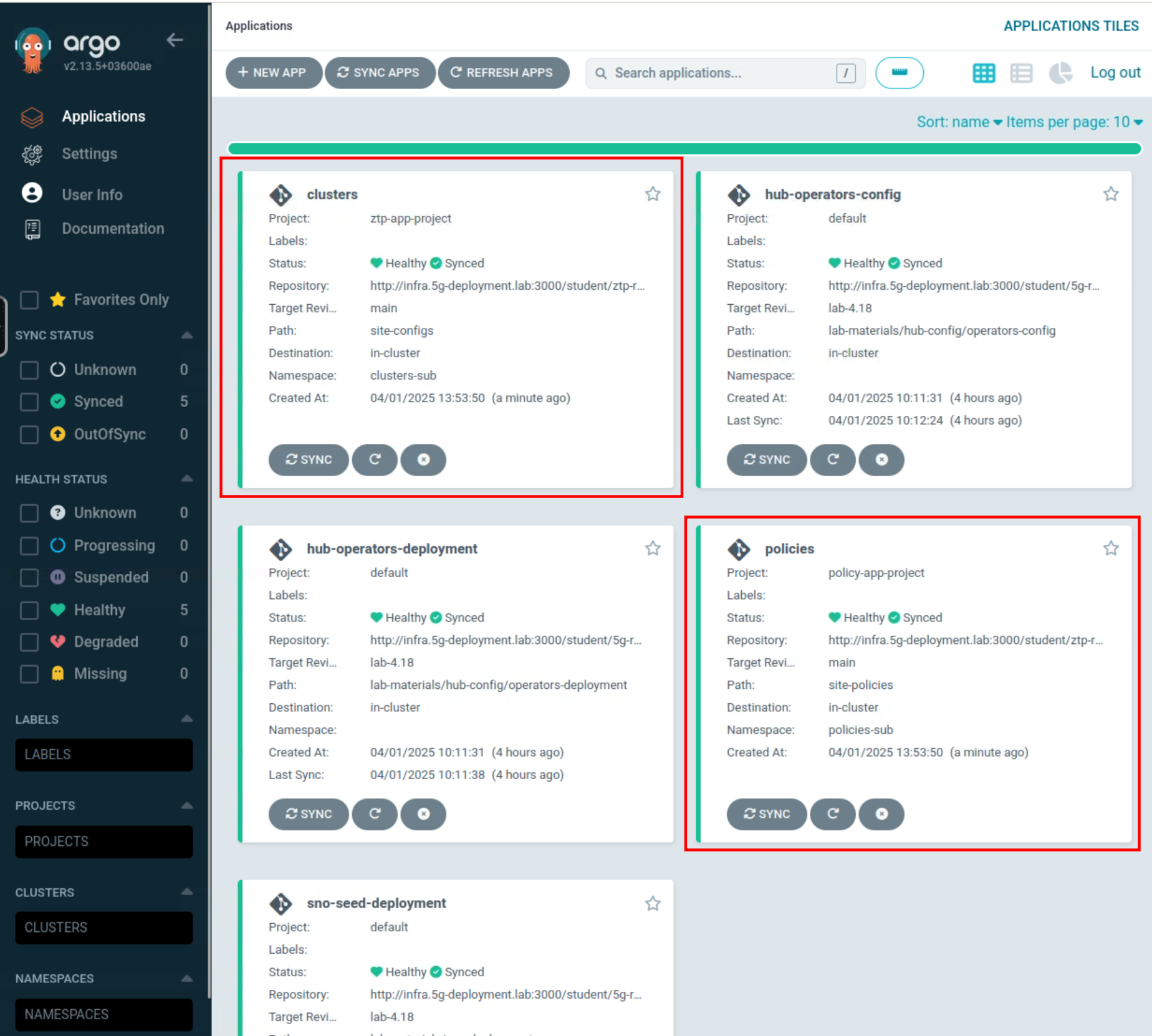

clusters. It is responsible for the infrastructure and physical deployment of Single Node OpenShift clusters from the Git repository.

-

policies. It focuses on the logical and operational configuration of those clusters by applying the Telco RAN DU reference configuration via RHACM policies.

-
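The manifest edits from the earlier steps can be rehearsed on scratch files before touching the extracted pipeline. The sketch below is an illustration under assumptions: both sample files are hypothetical stand-ins, not the real clusters-app.yaml or policies-app-project.yaml, and their initial values are invented. The first part runs the same three `sed` substitutions without `-i`, so the result is printed rather than written back. The second part uses `awk` in place of the `sed` append command from the openshift-adp step, only to make the expected indentation of the new entry explicit.

```shell
set -eu

# Hypothetical stand-in for an Argo CD Application manifest.
app_file="$(mktemp)"
cat > "$app_file" <<'EOF'
spec:
  source:
    path: some/original/path
    repoURL: https://git.example.com/placeholder.git
    targetRevision: some-branch
EOF

# Same substitutions as in the lab steps, printed instead of applied in place.
app_result="$(sed \
  -e "s|repoURL: .*|repoURL: http://infra.5g-deployment.lab:3000/student/ztp-repository.git|" \
  -e "s|path: .*|path: site-configs|" \
  -e "s|targetRevision: .*|targetRevision: main|" \
  "$app_file")"
echo "$app_result"

# Hypothetical stand-in for the AppProject manifest.
project_file="$(mktemp)"
cat > "$project_file" <<'EOF'
spec:
  destinations:
  - namespace: 'example-ns'
    server: '*'
EOF

# Add the openshift-adp destination right after the "destinations:" line.
# awk is a swapped-in technique here, not the command used in the lab.
project_result="$(awk -v q="'" '
  { print }
  /destinations:/ {
    print "  - namespace: " q "openshift-adp" q
    print "    server: " q "*" q
  }' "$project_file")"
echo "$project_result"
```

On the lab host, running `grep -E 'repoURL:|path:|targetRevision:'` against the edited files is a quick way to check that the substitutions took effect.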

To confirm that the Argo CD applications for clusters and policies are present and healthy, access the Argo CD instance of the lab at https://openshift-gitops-server-openshift-gitops.apps.hub.5g-deployment.lab and log in with the OpenShift admin credentials. You can also access it through the pre-configured Firefox bookmark. Another option is running:

You should see the following ArgoCD Apps running:

| Notice that the clusters and policies Argo CD applications are empty. This is because we have not yet committed any configuration to the ztp-repository Git repo. This is going to change in the next section. |
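The presence of the two applications can also be checked from a script. The sketch below rests on assumptions: it presumes the Argo CD applications live in the `openshift-gitops` namespace (suggested by the Argo CD URL above, not stated in this section) and that `~/hub-kubeconfig` holds a valid login. It skips the query when `oc` or the kubeconfig file is missing.

```shell
# Assumed namespace; adjust it if your GitOps instance runs elsewhere.
argocd_namespace="openshift-gitops"
hub_kubeconfig="${HOME:-.}/hub-kubeconfig"

if command -v oc >/dev/null 2>&1 && [ -f "$hub_kubeconfig" ]; then
  # applications.argoproj.io is the Argo CD Application resource type.
  oc --kubeconfig "$hub_kubeconfig" get applications.argoproj.io -n "$argocd_namespace"
  check_status="queried"
else
  echo "oc or $hub_kubeconfig not available; skipping the query"
  check_status="skipped"
fi
```

If the query runs, the clusters and policies applications should appear in the listing once the pipeline has been applied.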