Image-based upgrade for Single-node OpenShift Clusters

Introduction to Image-based Upgrades

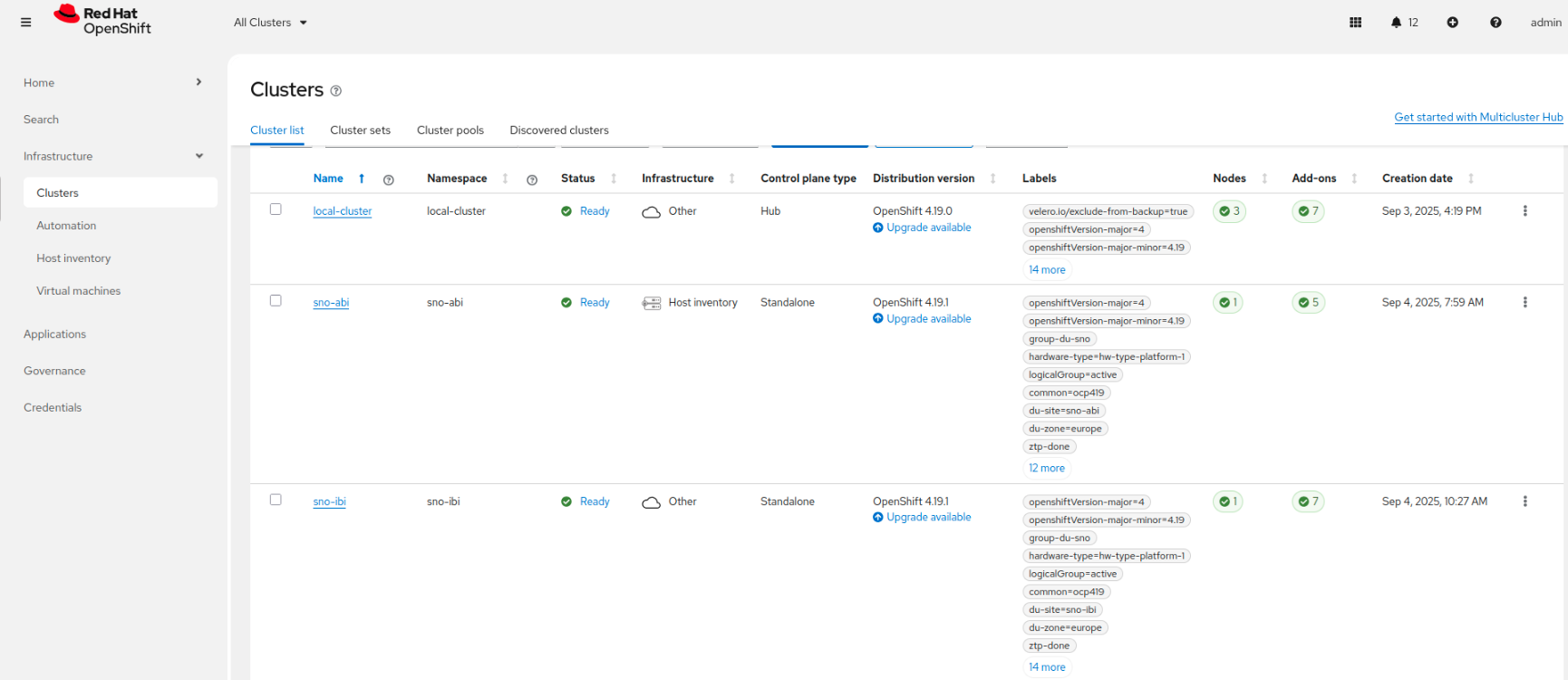

In this section, we will perform an image-based upgrade of both managed clusters (sno-abi and sno-ibi) by leveraging the Lifecycle Agent (LCA) and the Topology Aware Lifecycle Manager (TALM) operators. The LCA provides an alternative way to upgrade the platform version of a single-node OpenShift cluster, while TALM manages the rollout of configurations throughout the lifecycle of the cluster fleet.

The image-based upgrade is faster than the standard upgrade method and allows direct upgrades from OpenShift Container Platform <4.y> to <4.y+2>, and <4.y.z> to <4.y.z+n>. These upgrades utilize a generated OCI image from a dedicated seed cluster. We have already provided a seed image with version 4.19.1. The process to generate this seed image is the same as the one we executed in Creating the Seed Image for version 4.19.0 to install sno-ibi.

| Image-based upgrades rely on custom images specific to the hardware platform on which the clusters are running. Each distinct hardware platform requires its own seed image. You can find more information here. |

Below are the steps to upgrade both managed clusters and their configuration from 4.19.0 to 4.19.1:

- Create a seed image using the Lifecycle Agent. As mentioned, the seed image infra.5g-deployment.lab:8443/rhsysdeseng/lab5gran:v4.19.1 is already available in the container registry.

- Verify that all software components meet the required versions. You can find the minimum software requirements here.

- Install the Lifecycle Agent operator and the OpenShift API for Data Protection (OADP) in the managed clusters.

- Configure OADP to back up and restore the configuration of the managed clusters that isn’t included in the seed image.

- Perform the upgrade using the ImageBasedGroupUpgrade CR, which combines the ImageBasedUpgrade (LCA) and ClusterGroupUpgrade APIs (TALM).

| Both clusters were installed using different methodologies. While sno-abi was provisioned using the agent-based install workflow, sno-ibi was deployed using the image-based install. However, as long as they both meet the Seed cluster guidelines (which ensure the base image is compatible with the installed hardware and software configuration on the target clusters) and have the same combination of hardware, Day 2 Operators, and cluster configuration as the 4.19.1 seed cluster, we’re ready to proceed. |

First, let’s verify the software requirements in our hub cluster:

Verifying Hub Software Requirements

Let’s connect to the hub cluster and list all the installed operators:

oc --kubeconfig ~/hub-kubeconfig get operators

NAME                                                   AGE
advanced-cluster-management.open-cluster-management    2d1h
cluster-logging.openshift-logging                      2d1h
loki-operator.openshift-operators                      2d1h
lvms-operator.openshift-storage                        2d1h
multicluster-engine.multicluster-engine                2d1h
openshift-gitops-operator.openshift-operators          2d1h
ptp-operator.openshift-ptp                             2d1h
topology-aware-lifecycle-manager.openshift-operators   2d1h

Verify that the Image-Based Install (IBI) operator is installed:

oc --kubeconfig ~/hub-kubeconfig get pods -n multicluster-engine -lapp=image-based-install-operator

NAME                                           READY   STATUS    RESTARTS   AGE
image-based-install-operator-7f9587bbc-8fmg5   2/2     Running   0          2d1h

Next, double-check that the TALM operator is running. Note that the Pod name starts with cluster-group-upgrades-controller-manager, which is based on the name of the upstream project, Cluster Group Upgrade Operator.

oc --kubeconfig ~/hub-kubeconfig get pods -n openshift-operators

NAME                                                            READY   STATUS    RESTARTS       AGE
cluster-group-upgrades-controller-manager-v2-5df6886875-cvwj4   1/1     Running   3 (2d1h ago)   2d1h
loki-operator-controller-manager-576ff479bf-hjrfp               2/2     Running   0              2d1h
openshift-gitops-operator-controller-manager-57684fcdc9-9p5rm   2/2     Running   0              2d1h

Verifying Managed Clusters Requirements

In the previous sections, we deployed the sno-abi cluster using the agent-based installation and the sno-ibi cluster using the image-based installation. Before starting the upgrade, we need to check if the Lifecycle Agent and OpenShift API for Data Protection operators are installed in the target clusters.

| We already know that both SNO clusters meet the seed cluster guidelines and have the same combination of hardware, Day 2 Operators, and cluster configuration as the target seed cluster from which the seed image version v4.19.1 was obtained. |

Let’s obtain the kubeconfigs for both clusters, as we will need them for the next sections:

oc --kubeconfig ~/hub-kubeconfig -n sno-abi extract secret/sno-abi-admin-kubeconfig --to=- > ~/abi-cluster-kubeconfig
oc --kubeconfig ~/hub-kubeconfig -n sno-ibi extract secret/sno-ibi-admin-kubeconfig --to=- > ~/ibi-cluster-kubeconfig

If we check the operators installed in sno-abi, we’ll notice that neither LCA nor OADP is installed. This is expected because, as we saw in the Crafting Common Policies section, only the SR-IOV, PTP, Logging, and LVM operators were installed as Day 2 Operators.

oc --kubeconfig ~/abi-cluster-kubeconfig get csv -A

NAMESPACE                              NAME                                          DISPLAY                     VERSION               REPLACES   PHASE
openshift-logging                      cluster-logging.v6.3.0                        Red Hat OpenShift Logging   6.3.0                            Succeeded
openshift-operator-lifecycle-manager   packageserver                                 Package Server              0.0.1-snapshot                   Succeeded
openshift-ptp                          ptp-operator.v4.19.0-202507180107             PTP Operator                4.19.0-202507180107              Succeeded
openshift-sriov-network-operator       sriov-network-operator.v4.19.0-202507212206   SR-IOV Network Operator     4.19.0-202507212206              Succeeded
openshift-storage                      lvms-operator.v4.19.0                         LVM Storage                 4.19.0                           Succeeded

On the other hand, sno-ibi is running all the required operators: Day 2 Operators plus LCA and OADP, which are necessary to run the image-based upgrade process. This is because they were already included in the seed image version v4.19.0. See Running the Seed Image Generation for a list of the operators installed in the seed cluster. They are the same versions because sno-ibi was provisioned with that seed image (image-based installation).

oc --kubeconfig ~/ibi-cluster-kubeconfig get csv -A

NAMESPACE                              NAME                                          DISPLAY                     VERSION               REPLACES   PHASE
openshift-adp                          oadp-operator.v1.5.0                          OADP Operator               1.5.0                            Succeeded
openshift-lifecycle-agent              lifecycle-agent.v4.19.0                       Lifecycle Agent             4.19.0                           Succeeded
openshift-logging                      cluster-logging.v6.3.0                        Red Hat OpenShift Logging   6.3.0                            Succeeded
openshift-operator-lifecycle-manager   packageserver                                 Package Server              0.0.1-snapshot                   Succeeded
openshift-ptp                          ptp-operator.v4.19.0-202507180107             PTP Operator                4.19.0-202507180107              Succeeded
openshift-sriov-network-operator       sriov-network-operator.v4.19.0-202507212206   SR-IOV Network Operator     4.19.0-202507212206              Succeeded
openshift-storage                      lvms-operator.v4.19.0                         LVM Storage                 4.19.0                           Succeeded

Okay, let’s install the missing LCA and OADP operators in sno-abi and configure them appropriately in both managed clusters. To achieve this, a PolicyGenerator and a cluster group upgrade (CGU) CR will be created in the hub cluster so that TALM manages the installation and configuration process.

Remember that an already completed CGU was applied to sno-abi. As mentioned in the inform policies section, not all policies are enforced automatically; the user has to create the appropriate CGU resource to enforce them. However, when using ZTP, we want our cluster provisioned and configured automatically. This is where TALM steps in, processing the set of created policies (inform) and enforcing them once the cluster has been successfully provisioned. Therefore, the configuration stage starts without any intervention, resulting in our OpenShift cluster being ready to process workloads.

| You might encounter an UpgradeNotCompleted error. If that’s the case, you need to wait for the remaining policies to be applied. You can check the policies' status here. |

oc --kubeconfig ~/hub-kubeconfig get cgu sno-abi -n ztp-install

NAME      AGE     STATE       DETAILS
sno-abi   7m26s   Completed   All clusters are compliant with all the managed policies

Preparing Managed Clusters for Upgrade

At this stage, we are going to fulfill the image-based upgrade prerequisites using a GitOps approach. This will allow us to easily scale from our two SNOs to a fleet of thousands of SNO clusters if needed. We will achieve this by:

- Creating a PolicyGenerator to install LCA and OADP. This also configures OADP to back up and restore the ACM and LVM setup.

- Creating a CGU to enforce the policies on the target clusters.

- Configuring the managed clusters with configurations that were not or could not be included in the seed image.

- Running the image-based group upgrade (IBGU) process.

| An S3-compatible storage server is required for backup and restore operations. See the S3 Storage Server section. |
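The credentials for that S3 endpoint reach OADP as a base64-encoded, AWS-style credentials file: this is the cloud value patched into OadpSecret.yaml by the PolicyGenerator in this section. The sketch below shows one way such a value can be built. The helper name s3_credentials_b64 is hypothetical, and the access key admin and secret key admin1234 are simply what the lab's cloud string decodes to; substitute the values of your own S3 server.

```shell
# s3_credentials_b64 ACCESS_KEY SECRET_KEY
# Hypothetical helper: prints an AWS-style credentials file (profile "default")
# as a single base64 line, suitable for the "cloud" key of the OADP secret.
s3_credentials_b64() {
  printf '[default]\naws_access_key_id=%s\naws_secret_access_key=%s\n' "$1" "$2" \
    | base64 | tr -d '\n'   # strip line wraps so the value stays on one line
  echo
}

# Lab values (assumed from decoding the cloud string used in this section).
s3_credentials_b64 admin admin1234
```

Piping through tr rather than relying on a base64 wrap flag keeps the helper portable across GNU and BSD userlands.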

Let’s create a PolicyGenerator manifest called requirements-upgrade on the hub cluster. This will help us to create multiple RHACM policies, as explained in the PolicyGen DeepDive section.

| Because the reference OADP DataProtectionApplication source CR relies on a configuration that is deprecated in OADP 1.5, a custom source CR must be created and referenced in the policy. The new OadpDataProtectionApplication.yaml source CR, stored as a custom CR, is shown below. |

cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/v4.19/source-crs/custom-crs/OadpDataProtectionApplication.yaml
---
# The configuration CR for OADP operator
apiVersion: oadp.openshift.io/v1alpha1
kind: DataProtectionApplication
metadata:
  name: dataprotectionapplication
  namespace: openshift-adp
  annotations:
    ran.openshift.io/ztp-deploy-wave: "100"
spec:
  configuration:
    nodeAgent:
      enable: false
      uploaderType: restic
    velero:
      defaultPlugins:
        - aws
        - openshift
      resourceTimeout: 10m
status:
  conditions:
    - reason: Complete
      status: "True"
      type: Reconciled
EOF

Next, let’s create a PolicyGenerator manifest to install and configure the LCA and OADP operators. It also configures OADP to back up and restore the ACM and LVM setup.

cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/v4.19/requirements-upgrade.yaml
---
apiVersion: policy.open-cluster-management.io/v1
kind: PolicyGenerator
metadata:
  name: requirements-upgrade
placementBindingDefaults:
  name: requirements-upgrade-placement-binding
policyDefaults:
  namespace: ztp-policies
  placement:
    labelSelector:
      common: "ocp419"
      logicalGroup: "active"
      du-zone: "europe"
  remediationAction: inform
  severity: low
  namespaceSelector:
    exclude:
      - kube-*
    include:
      - '*'
  evaluationInterval:
    # This low setting is only valid if the validation policy is disconnected from the cluster at steady-state
    # using a bindingExcludeRules entry with ztp-done
    compliant: 5s
    noncompliant: 10s
policies:
  - name: requirements-upgrade-subscriptions-policy
    policyAnnotations:
      ran.openshift.io/soak-seconds: "30"
      ran.openshift.io/ztp-deploy-wave: "10000"
    manifests:
      - path: source-crs/reference-crs/LcaSubscriptionOperGroup.yaml
        patches:
          - metadata:
              name: lifecycle-agent-operatorgroup
      - path: source-crs/reference-crs/LcaSubscription.yaml
        patches:
          - spec:
              channel: stable
              source: redhat-operator-index
      - path: source-crs/reference-crs/LcaOperatorStatus.yaml
      - path: source-crs/reference-crs/LcaSubscriptionNS.yaml
      - path: source-crs/reference-crs/OadpSubscriptionOperGroup.yaml
      - path: source-crs/reference-crs/OadpSubscription.yaml
        patches:
          - spec:
              source: redhat-operator-index
      - path: source-crs/reference-crs/OadpOperatorStatus.yaml
      - path: source-crs/reference-crs/OadpSubscriptionNS.yaml
  - name: requirements-upgrade-config-policy
    policyAnnotations:
      ran.openshift.io/soak-seconds: "30"
      ran.openshift.io/ztp-deploy-wave: "20000"
    manifests:
      - path: source-crs/reference-crs/OadpSecret.yaml
        patches:
          - data:
              cloud: W2RlZmF1bHRdCmF3c19hY2Nlc3Nfa2V5X2lkPWFkbWluCmF3c19zZWNyZXRfYWNjZXNzX2tleT1hZG1pbjEyMzQK
      - path: source-crs/custom-crs/OadpDataProtectionApplication.yaml
        patches:
          - spec:
              backupLocations:
                - velero:
                    config:
                      insecureSkipTLSVerify: "true"
                      profile: default
                      region: minio
                      s3ForcePathStyle: "true"
                      s3Url: http://192.168.125.1:9002
                    credential:
                      key: cloud
                      name: cloud-credentials
                    default: true
                    objectStorage:
                      bucket: '{{hub .ManagedClusterName hub}}'
                      prefix: velero
                    provider: aws
      - path: source-crs/reference-crs/OadpBackupStorageLocationStatus.yaml
  - name: requirements-upgrade-extra-manifests
    policyAnnotations:
      ran.openshift.io/soak-seconds: "30"
      ran.openshift.io/ztp-deploy-wave: "30000"
    manifests:
      - path: source-crs/reference-crs/ConfigMapGeneric.yaml
        patches:
          - metadata:
              name: disconnected-ran-config
              namespace: openshift-lifecycle-agent
EOF

Create the openshift-adp namespace on the hub cluster. This is required so that the backup and restore configurations will automatically propagate to the target clusters.

cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/v4.19/ns-oadp.yaml
---
apiVersion: v1
kind: Namespace
metadata:
  creationTimestamp: null
  name: openshift-adp
spec: {}
status: {}
EOF

Include the configuration of a disconnected catalog source and cluster logging as extra manifests. Remember that some components, such as the catalog source or the cluster logging certificates, are excluded from the seed image when it is created.

cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/v4.19/extra-manifests.yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: disconnected-ran-config
  namespace: ztp-policies
data:
  redhat-operator-index.yaml: |
    apiVersion: operators.coreos.com/v1alpha1
    kind: CatalogSource
    metadata:
      annotations:
        target.workload.openshift.io/management: '{"effect": "PreferredDuringScheduling"}'
        ran.openshift.io/ztp-deploy-wave: "1"
      name: redhat-operator-index
      namespace: openshift-marketplace
    spec:
      displayName: default-cat-source
      image: infra.5g-deployment.lab:8443/redhat/redhat-operator-index:v4.19-1754067857
      publisher: Red Hat
      sourceType: grpc
      updateStrategy:
        registryPoll:
          interval: 1h
  clusterlogforwarder.yaml: |
    apiVersion: observability.openshift.io/v1
    kind: ClusterLogForwarder
    metadata:
      annotations:
        ran.openshift.io/ztp-deploy-wave: "10"
      name: instance
      namespace: openshift-logging
    spec:
      filters:
        - name: ran-du-labels
          openshiftLabels:
            cluster-type: du-sno
          type: openshiftLabels
      managementState: Managed
      outputs:
        - loki:
            labelKeys:
              - log_type
              - kubernetes.namespace_name
              - kubernetes.pod_name
              - openshift.cluster_id
            url: https://logging-loki-openshift-logging.apps.hub.5g-deployment.lab/api/logs/v1/tenant-snos
          name: loki-hub
          tls:
            ca:
              key: ca-bundle.crt
              secretName: mtls-tenant-snos
            certificate:
              key: tls.crt
              secretName: mtls-tenant-snos
            insecureSkipVerify: true
            key:
              key: tls.key
              secretName: mtls-tenant-snos
          type: loki
      pipelines:
        - filterRefs:
            - ran-du-labels
          inputRefs:
            - infrastructure
            - audit
          name: sno-logs
          outputRefs:
            - loki-hub
      serviceAccount:
        name: collector
  mtls-tenant-snos.yaml: |
    apiVersion: v1
    kind: Secret
    metadata:
      name: mtls-tenant-snos
      namespace: openshift-logging
      annotations:
        ran.openshift.io/ztp-deploy-wave: "10"
    type: Opaque
    data:

ca-bundle.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUZtVENDQTRHZ0F3SUJBZ0lVUkZBeUF0citIUVQrZnZlNStSY3VONEQ3bEtzd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1d6RUxNQWtHQTFVRUJoTUNSVk14R2pBWUJnTlZCQW9NRVd4dloyZHBibWN0YjJOdExXRmtaRzl1TVJvdwpHQVlEVlFRTERCRnNiMmRuYVc1bkxXOWpiUzFoWkdSdmJqRVVNQklHQTFVRUF3d0xkR1Z1WVc1MExYTnViM013CklCY05NalV3TmpBME1Ea3pOVEkwV2hnUE1qQTFNakV3TVRrd09UTTFNalJhTUZzeEN6QUpCZ05WQkFZVEFrVlQKTVJvd0dBWURWUVFLREJGc2IyZG5hVzVuTFc5amJTMWhaR1J2YmpFYU1CZ0dBMVVFQ3d3UmJHOW5aMmx1WnkxdgpZMjB0WVdSa2IyNHhGREFTQmdOVkJBTU1DM1JsYm1GdWRDMXpibTl6TUlJQ0lqQU5CZ2txaGtpRzl3MEJBUUVGCkFBT0NBZzhBTUlJQ0NnS0NBZ0VBd2R0OWZTcm02RitmVlhESENKUTJKVFJZdTFSa0h0eWhtMG93VnlCSDcySDAKQ3Y3eGlOVm5VYVpXcmczZVhneXVwR1BJVEpYNzJQNGV1U0JUa0lnOGRYSU9wb0piSUg5L3AvbW1wb3ByZjJ4bgp3eFJRLzNReGYra20vZmo5V3dPelkxaWNPQzZWM0hRSjBoU3JYMFRsRFRzRnVUTkNmeE1KR1Jldk5ZQmNNSGZyClRqTEhtRGtNVys5dlZvcjl4OUxkeGN0WFc5c1dVMU84MzhKT2hSMWlHbWZqMHhxN1RlWXFBR3dhSDFhaDN2c0oKcU93UmlYdTV1RWtxN3JFMkRkcGR3aURHU1pUMFZUbDJCUjRXcnQrZUlxcTdYbzdBdDI0Q2ZVOGtzcVlDT1dyRQowK0NjR0FrZUhqajBBSHFHRys5VW5LNzhnTjYwOWM4S2QzYmthOE5uaXZTTWsvRHVZODVBSENlUHNiTlpXT1NBCnZwSEJnYUVrZ0NGMGFiODZ2NGpMNXVoMm1WdVB2aWFxRE1uV1FzcGRSUE1DUVhQTDd4bDZYUTRtSnNmV1haeVgKV3M5SzJRbWVRNTNxbkl6T3JuYldnYnNwTW9QUWxYbktwa3AydzI3cm9UYXBCK0VVVXY0RzUwSXZIZU1tQy9UKwo4b25qdEU5Tk1obW1lVS81Y1MvQVVtVHZWZGk1NTE0cE5zMnl2UnZyZkozQjZTZlF1KzBEZXdKN3ZoT3FSUzA0Cjk5QlZkNWpSOVduaytTSEZFTjZqU2Jxa2lvNmRjSHBZN1E4TXpNRU1WbkxHUTQ3TWtWaTVkelRDcWszakVtak4KWFFHU3o1MCswS2s3eERzWG9za0c0WmNiUGxpa0Y1ZjBLTDd0dHlQZTdvUkg1M0RTdUtCeVNTOHpQb2k3b2NrQwpBd0VBQWFOVE1GRXdIUVlEVlIwT0JCWUVGQkYvSWhzUFozd0pHa01jNjdnK2dWUHZ0YUtlTUI4R0ExVWRJd1FZCk1CYUFGQkYvSWhzUFozd0pHa01jNjdnK2dWUHZ0YUtlTUE4R0ExVWRFd0VCL3dRRk1BTUJBZjh3RFFZSktvWkkKaHZjTkFRRUxCUUFEZ2dJQkFMYW9GVjQ0bTRmeEkxRExGcjNmQXE4bzBycFE3OVdWRVArVTYwZTNBV0x1R1BQLwpKeTlESlFCdzZuSEtqaDFXU0srWG85K2F0ci9qQjJ0Tk9hZEE4blRCZTZ1dk92RnNnTDU1R3hhYmVrMWNvVlJ2Cm15VTBma096cXFkVWRJejNrMkpzZGNONjZJYTZYdml3RVBuY3VyNHhnNnJhdUd1VDYrOTREK09ZdldtbHM1MGgKUE5mcDhNalBoUEJqL3UyMVBFdWxHV2l1bnhpeHdIT
zBJTWJzeHRFcU5jaXpZZkdkRnJjT0hsYzdHTVIxM0xhagpqMVZqaGkxZlJUSllDMkh1ZlFUUkJVNTlxNVVNQ0p2OWg0REw3UFh4ZFUvcFU2UWNTcUNqaGRXMjNoNXYzakZmCkQyWnN0US9jWFVkWXVZd0FMNElyVWFwekZqNU8xdVJTMHU4V2k0cnhWYlY2SUdrQm14b1JqWVovQkRCNTh6MEwKRElZRjhORFMvM25MY0sxVzNVNVdXeUVMd01MZVRuQTh0YmloVVJEcG4zN2hDR3JkU1QvbFpSTmt0czBzS0dUVQpVQktoMzM2M2tkY1RzNm45V3Z4TzJjMG1kY3JIMG9EL0pzcEpKM1oweGZLaVlMR0tINFk4WXdhWE8rTGMxZDdBClQ3UjdWcTVwKzdtWVZCVXFWenVaR0NvdmVkckI1UGc0ZVVCdVRLZ3E1OHFtTlVEM3IxS0hOeHhoVU54T1hkdzAKemI5RWNHR0JDaG9paExDOEk2QU8xODFmdENzUm02MnV3eGRKQjBIQWtXN290Z0hOdmFlTHhQbmkxMmhLZ1pQbgpUNjhxeU9HUGVVUEh3NURZQ3pSOE5ZTm9ma1M4OUwwYTlvL2lrYVRqczNicXMrNTB6QnFYYlFHU0NWanEKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

tls.crt: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUY5VENDQTkyZ0F3SUJBZ0lVTndKS2xlWk1LcnNBQWoxT1BWODdpblRqd25Vd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1d6RUxNQWtHQTFVRUJoTUNSVk14R2pBWUJnTlZCQW9NRVd4dloyZHBibWN0YjJOdExXRmtaRzl1TVJvdwpHQVlEVlFRTERCRnNiMmRuYVc1bkxXOWpiUzFoWkdSdmJqRVVNQklHQTFVRUF3d0xkR1Z1WVc1MExYTnViM013CklCY05NalV3TmpBME1Ea3pOVE13V2hnUE1qQTFNakV3TVRrd09UTTFNekJhTUZzeEN6QUpCZ05WQkFZVEFrVlQKTVJvd0dBWURWUVFLREJGc2IyZG5hVzVuTFc5amJTMWhaR1J2YmpFYU1CZ0dBMVVFQ3d3UmJHOW5aMmx1WnkxdgpZMjB0WVdSa2IyNHhGREFTQmdOVkJBTU1DM1JsYm1GdWRDMXpibTl6TUlJQ0lqQU5CZ2txaGtpRzl3MEJBUUVGCkFBT0NBZzhBTUlJQ0NnS0NBZ0VBdkdyNG1PT0lrM0FVcVl1aklrRnlmYk1tUjViN2ZQQXNOa1Q1Zm1DVzZDdHYKME5qYWk5UUdjOXRHN1JTcG55VHJ3L0tjdFlRK0ZIQnE3Ym04MFE5OEhvYkNrNHBMK3Q3eUxWOVVJUDNDSGxqcwpDcC84empwVTd4Smo0U1RQenpKeDZJdEczemZ0RGt4SWhFLzRTVzlacjBLcXdQdmhiam8xekFuSnlDNkVVUUo3CmV6UmNqeHIwbGluZVNpcGdhZGd2YXE4a2FSbnQvbWJlT1BvWVU3anpWb0VZeDdlajVucVV3bVF3UFg1MDBIcnIKOVpGb0gzNEVqS0JncEl5L1g2OFJoSFBjL3pOZTBzU2ZOMFhKL0tBRUtEdlRzTjRVSTFFRHB2ZnVTV0t6SVNweQp0VzNUTTlidzE2L3h4Uk9rSkxOMGs5TFNrZnY1TXJodkJacVhKR3ZCUUNPZ1ZlOFpqR2YzZ1Vod0pDMVFLcVppCmJqeVF3OWNzU0dTODhlUVB6V003R3B5L2VRcEFEQi9lWlAzeTdLNGdqa2JpVkFOd0tkQmo4bXhGMU1WSTd0ZnMKZzlYamU5OG9Yc2d1SnlORGhtTDJnRjk3bC9VZXFya25JcDRmTDJ1b3F5MkppUDJEU1lvdU5QaGszNUh4czA2UAovb1pPbjFlTVp2OHBSTUtWQmhjWVMyV3Vob0dKQ1JTVDc3ZXV2bS9mM0loWmxiZC83YUhhNURXMm9aYURHZTgyCkIzVUlvYjB2UUZ4UWd2YVZuUHNockQyMElzQWJoNmIvUXJCd2lUT2ZUMkNPVmNwVWRRdFMxVFJUY3ljNndGWmUKNmxueHZBcWtBNVEvQkdKZHgrdjBxRW1XY1AzQlRqNHF4QTVFTWw4blh6ZjBPc2k0VGNncGszT0tCVnJLWm1FQwpBd0VBQWFPQnJqQ0JxekFkQmdOVkhRNEVGZ1FVTnpGUW5McXY3dG1nRHl5MGlDUWkxSkpYVjU0d0RBWURWUjBUCkFRSC9CQUl3QURBT0JnTlZIUThCQWY4RUJBTUNBcVF3SUFZRFZSMGxBUUgvQkJZd0ZBWUlLd1lCQlFVSEF3RUcKQ0NzR0FRVUZCd01DTUNrR0ExVWRFUVFpTUNDQ0htbHVjM1JoYm1ObExtOXdaVzV6YUdsbWRDMXNiMmRuYVc1bgpMbk4yWXpBZkJnTlZIU01FR0RBV2dCUVJmeUliRDJkOENScERIT3U0UG9GVDc3V2luakFOQmdrcWhraUc5dzBCCkFRc0ZBQU9DQWdFQVlpWXVoNVRqUndqa3F3V3kvbHRpUEJTR3AvL0lqT2RQdy9GMlRFblJYOVllZWd6a0t6dDAKSW9kSUd4bHVtVG9KK1RFejNKRXRhMFBWbzErM2dXOGxRSlF
WZ1RIWkRhTzczQUVwZE94ZGM3TVlCRHU1RXJGdQpDYjNWYlBuTnR0dDd5dHN0R0o3cGJtcVFRK21hRmlEbEhUSjNCT2hUMk92NWhqV1A2M1Fua3RrdjdaenRVQTBECldNRE1HSllDaThmRG9ha3QzVmJua2REdDQ2b05kL0NBMFNKOWhGaWhZUjYwNkRtQ2RLRzNoZElGYXNxTGJENVoKMGFrdG1lVTk0NnJnVzN3b1YvL0hjRUV6dHIzeGt2Q3NOWFNQTDR6MGRaTlBZb09pVUJWRmsrUVo1cWV5SXZDdgpzVU5uU0cxbmFESWRXeXk2RnArWThJYTBJTWVhU1FQOCtHbHdld1A2eGorcFl0Qm44ekVRSG5jc1pZU1FMb082CktXbUlVam1yMHlPbS84enQzQkJOVXBqZ0NhcnVPbk1WRnhOL2tJUFNlRmtmYkJVTk9zdjdOL0hUTEdrVld2U3AKV0dLQm8xS0FITVZJMWpLWUUwazRiS0VpbE5iTGVpenlkNHRJMEErZDh5bWhKRys4dnE0Mmgwb2tZOVUyTzBBRQozMW1EV1VNcW1vYW5xZm4vQ3ZqYTFTeFB4K1F3aTFkQ0Y4cWo1RWJDQXphWTJ4TTE0Z1FYMER5Y1crNXZyU0llCmMxaWdBWnZodEFkSVBiV2VkUEFNd2FsaGJvdlhpMkJQYWh1V0VkeVRQRXJBRVdmOE9jNVo2MU1mVy8yL3hXbWoKVHNMYnRRVENCMjFDYm9mRWlJMHkyNWtNMzRsZWx3NjVObjA5Kzl5Y3hrQzQxaFZ5RVNnMkMvOD0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

tls.key: LS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tCk1JSUpRZ0lCQURBTkJna3Foa2lHOXcwQkFRRUZBQVNDQ1N3d2dna29BZ0VBQW9JQ0FRQzhhdmlZNDRpVGNCU3AKaTZNaVFYSjlzeVpIbHZ0ODhDdzJSUGwrWUpib0syL1EyTnFMMUFaejIwYnRGS21mSk92RDhweTFoRDRVY0dydAp1YnpSRDN3ZWhzS1Rpa3Y2M3ZJdFgxUWcvY0llV093S24vek9PbFR2RW1QaEpNL1BNbkhvaTBiZk4rME9URWlFClQvaEpiMW12UXFyQSsrRnVPalhNQ2NuSUxvUlJBbnQ3TkZ5UEd2U1dLZDVLS21CcDJDOXFyeVJwR2UzK1p0NDQKK2hoVHVQTldnUmpIdDZQbWVwVENaREE5Zm5UUWV1djFrV2dmZmdTTW9HQ2tqTDlmcnhHRWM5ei9NMTdTeEo4MwpSY244b0FRb085T3czaFFqVVFPbTkrNUpZck1oS25LMWJkTXoxdkRYci9IRkU2UWtzM1NUMHRLUisva3l1RzhGCm1wY2thOEZBSTZCVjd4bU1aL2VCU0hBa0xWQXFwbUp1UEpERDF5eElaTHp4NUEvTll6c2FuTDk1Q2tBTUg5NWsKL2ZMc3JpQ09SdUpVQTNBcDBHUHliRVhVeFVqdTEreUQxZU43M3loZXlDNG5JME9HWXZhQVgzdVg5UjZxdVNjaQpuaDh2YTZpckxZbUkvWU5KaWk0MCtHVGZrZkd6VG8vK2hrNmZWNHhtL3lsRXdwVUdGeGhMWmE2R2dZa0pGSlB2CnQ2NitiOS9jaUZtVnQzL3RvZHJrTmJhaGxvTVo3ellIZFFpaHZTOUFYRkNDOXBXYyt5R3NQYlFpd0J1SHB2OUMKc0hDSk01OVBZSTVWeWxSMUMxTFZORk56SnpyQVZsN3FXZkc4Q3FRRGxEOEVZbDNINi9Tb1NaWncvY0ZPUGlyRQpEa1F5WHlkZk4vUTZ5TGhOeUNtVGM0b0ZXc3BtWVFJREFRQUJBb0lDQURWVVc2KytleG9zRjVVVGdtV0FPNzByClYxTGMvNnFSdWhuVU5QL1pxK3pqMm42MDJrckloTmtIQUJDN2ovVU0rTFJaOTVRQzdhVlFXbHVWL2tUNENvd0QKWFpCd0RPaGhjUTk1azNEUkVrQVBzQ09qdStUTkt0d09Ddm9mTnJoeEVUK2VLRDJtOFRCaVZBWXJNbDNxcCtwTQp4dExmbUNOZ1UzakFibjM3K0pTTFROTUc4NG5IdWVIRTBQZVIvZjhIWXdoaHNUOFVTVUgvOExjVXhvenY1T1FmCm54bHNOM1pWVE1TbW9lYk40NWRjcTJ1eXI5TjJFaWlSZmprazQwZmNYK3RxOWVxL3FmK2pDbU9WRzhJbXNuYUcKMUhpVHF3U0IralFvcWFmWXlWWENVM3haclBWWHlZeHE3dFgycExlRW1hTDdnWUV6WGduR25SbnJjd3NxZXFSRgpxL1VBRy9tcEVaNlNScEJaVWFpWXN5Q0xUb2RmYkY1eFdCME9SV21oMFp3WDJjcExDRlI3SXRJQTZEMVJ3cEJBCnQ0ZnRnTUlOZnNOZlpmTHhRaFFYZkZCUUhicU1PaVJWYnV3UEovdjBoV21yL3hTRlVldkdYZG5vTUlIcHR1dmsKMDB5cVYwVG1zeWhleThiMTRoUmlJT3M5UmFmRGJXWmhXbEMrVzZnN3FyTnJYWG9iQXpqSHVydjRweXhyY3I0WQp1R3B4alpha0NCUWRZbDMxVXV6Sy9pc2ZxRlRjV1lmZ0wycHpDWGdubWF1SXFNREFocXp6QnhmZXUzQlJhS0w4CmF5WHZYNVY2bzNxV0YxUGtVd1d4ZEc4UDVVME03dWg5N3Zzamxldml4dmFFaUZJSW9oRk1iM1p0ZW1seHgvcnYKWXFYTkhBQ2t1TmgxcTRRWlZWU3ZBb0lCQVFEcjdtRjZQcFc
rako1d0g4dlNYUUtxdjY5dDFpOVpFT1BPWFpmaQo3c0FFSWUzZjljUWFzcXBwaHpFZWFERG4yWDhGY3ZtTUE2R1p0b1BMTTd5UTdmTXh4VXJhRmZPNE55TTQxVjdYClRPc04zazlBM0NWMGhwVWFmSzNZLzdaeWp6WG54ZUNEd2owd3NaT0lVSVFyVk9aK3BEVDE1Nlo0M1hSaVJpUmIKRnZ4R09mOGRnRjJ1QUxjYUtGUU1SQ0l4RXFRZVJpenZqWFNmZlBjc2R5T2FpaXV4VEZISUZReHE0R0wvT0prLwp2bGhjTFBEYnA4bSs0QUpoVnZlc3hUSm5jUFE1K0tOOTZkNVNmeVMvQW1ySHdjVGdyTllhZm5sMUFWc2ZOT21pCmhaQXVEbFpxR0dRMFMwaStJdUVvNDRsb3orRTVpeStZRTBjbHhaOTE4bld1b3c3ekFvSUJBUURNY2ZHajRkdzUKdkplc1FLaWZmQ3c4VDRQOUxMN2dPS09PMmFaVStodCtWeHVKQUdsZkRoYTdmYm5YcUlOQ2doSjEzK0NLdU5LZgo1ZTJSVnhFWjRNanZ5OUpxakRXNkRySmU5dFNlcjNSaTJ4ZXpHTEhuczVUYWN4eFhFWm5wcWxod1lyK01mVjlFCkQyTjNLRWd4MUpLZjZ0UjhINDFNS2V4aW1LdFpkVXRSUkJzcDRjQkttL0RxeFJqcUYxNS9sTFI4TFBCUU5jTmsKL2ZrYkJGWXhrQnh4Q0RPQU92S1NYNWFhZUF4Wko0OGhqbkhWV2RQRVdBaWJETWtvTmJoWU11ZW9NM2h6b2FLLwpGRTFUU3FTamVJcFhSUTc5OTRCTWlGT3h0V29JNHBVbWlJdWpCTjdCTVdCdkxTRkZJV2xZSkprcmJZRjNtUXNSCmQ1d25qQkY0M1JKYkFvSUJBRlhWaFNzM1I3MXFaVjMvZzJUR0orazlFYkxSSUtxenBWMTMyWUZiUVFwLzJZNEkKV004cHZ5dmpYbkJra1o1WUY0bEEraDhCVnpLWUh6eTNWdHdYWmNudXNEdkZqc1N2Y3FZRG9weUx4RnhvUzhjYQpFSnBqT0MzSnZHbmRKbUJwVDhCQjBsdTlPQXZXSHdtUjJYUDJVR0UwbG52OFNpbGcwQzNNdlA5U3puY3lOR2xrClFUREQyOW10WUY2U0R5cHhVTG9lNTh4RFYzR0t3bFl3QmdqOFNjY1lNQXl0ODdXU2F4SFZZcE81U1daSGgxMHkKbndoTmNUQSt0cDdwbzF2VTBWV2g2c0V0YTQveDU4bUNOSnoyRncxeWMvWnhtdmlCaE1oR3ROVkc2RnlKSk5FMgpqSVlsK1pJTEdJV0t1bndpWFJ0VlV2eHR6dzJqNTA2KzVpZWg5UmNDZ2dFQVNqdUZOYkFvdW40VHhHT2wxbUxMCjNRb3lMcGMwcDAxcGRkRHBhQ2w0R2lPZXg5dnlacVZDODhqdTFiTkdGYndNMytmdUsvQjM2YjhleDRzSmxvL2IKNWRYb0RPL2tBaTZiN1lkS0pHUW9xa3hMQ3FpSTBFeVFXOUU0RlJVN0FYRHNzOEhuTXlmQ2szL1M2YzBpaVpWWgp0OThZVUFsVTBMYllNZVNsTXRJNENzWGo1dzBsT1BIdVJCQlV2NHJFc1NaWmNrME81TkRncGFiaDhFRmUycGdzCis0Mnl4WGloNHl2NkR4UlB4MzlwcFJHSG02UUdGR0N5bnpuQlFHeGhCd1ZVditvUWJrdmVQK3NyT0hiOTJLMUgKN2ZBUlJYMjhoQTFyOWphY3phVVg2dW5oYWN1MjVnYjdzT0orRmcvUHBFV0ZxQk1XMDBvcWpxa1RkZmlSejRUVgp5UUtDQVFFQTVIenM1ZEV6VnBtWE1rb0p6WHh2OGtrMGtVTzZURjhOSTlrL3hncGRsZEJVZitQWTdNOUhCR1JuCmVrTXZjT1hkdlRWRHpTTlovZ3diOEdham5zSTQ4YTE4SXNSbjlOT1J
EZTBrVnJmbUE3c2FNNWhQOXVWcnFPcjAKM2RSU2RycTJZc2xucndNUEMwMTFVaG83ZnFBZE55R1l1N201OGwyUXRGYnJtL1ptSlArT2pvMVQwaG00UERDVQpjT2lmeHVwdHVUU3RqK2JqVjBVT0gxY2x5czltRi9Dcjd3c0ttVWt4TENKRDg5a3ErZW53THVZUkpqdWtDNjJxCkJ4Qy9LYVRmVGpXUVNOSmtGc1J5SUNkUDBTOXZ0SWhndXYvV1hRNXJ0VjI5N2tlQkdIT1pjZUJIOVJ1TTZiNUYKVFFlL2ltSytkYlNUeW9hRjR4TnVYMHV1ZkRyZjVBPT0KLS0tLS1FTkQgUFJJVkFURSBLRVktLS0tLQo=

EOF

Modify the kustomization.yaml inside the site-policies folder so that it includes this new PolicyGenerator manifest, which will eventually be applied by ArgoCD.

cat <<EOF > ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/v4.19/kustomization.yaml
---
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
generators:
  - common-419.yaml
  - group-du-sno.yaml
  - sites-specific.yaml
  - requirements-upgrade.yaml
# - group-du-sno-validator.yaml
configMapGenerator:
  - files:
      - source-crs/reference-crs/ibu/PlatformBackupRestoreWithIBGU.yaml
      - source-crs/reference-crs/ibu/PlatformBackupRestoreLvms.yaml
    name: oadp-cm
    namespace: openshift-adp
generatorOptions:
  disableNameSuffixHash: true
resources:
  - group-hardware-types-configmap.yaml
  - site-data-hw-1-configmap.yaml
  - ns-oadp.yaml
  - extra-manifests.yaml
patches:
  - target:
      group: policy.open-cluster-management.io
      version: v1
      kind: Policy
      name: requirements-upgrade-extra-manifests
    patch: |-
      - op: replace
        path: /spec/policy-templates/0/objectDefinition/spec/object-templates/0/objectDefinition/data
        value: '{{hub copyConfigMapData "ztp-policies" "disconnected-ran-config" hub}}'
EOF

Then commit all the changes:

cd ~/5g-deployment-lab/ztp-repository/site-policies

git add *

git commit -m "adds upgrade policy"

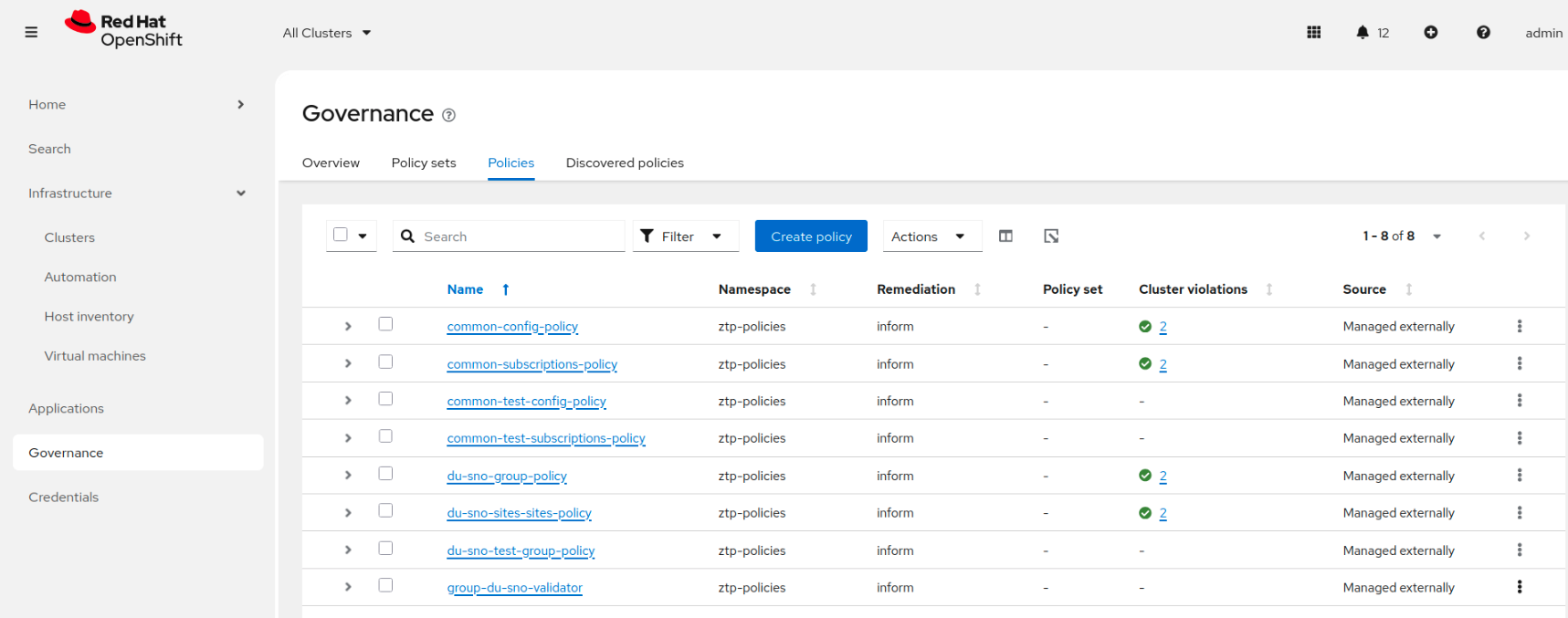

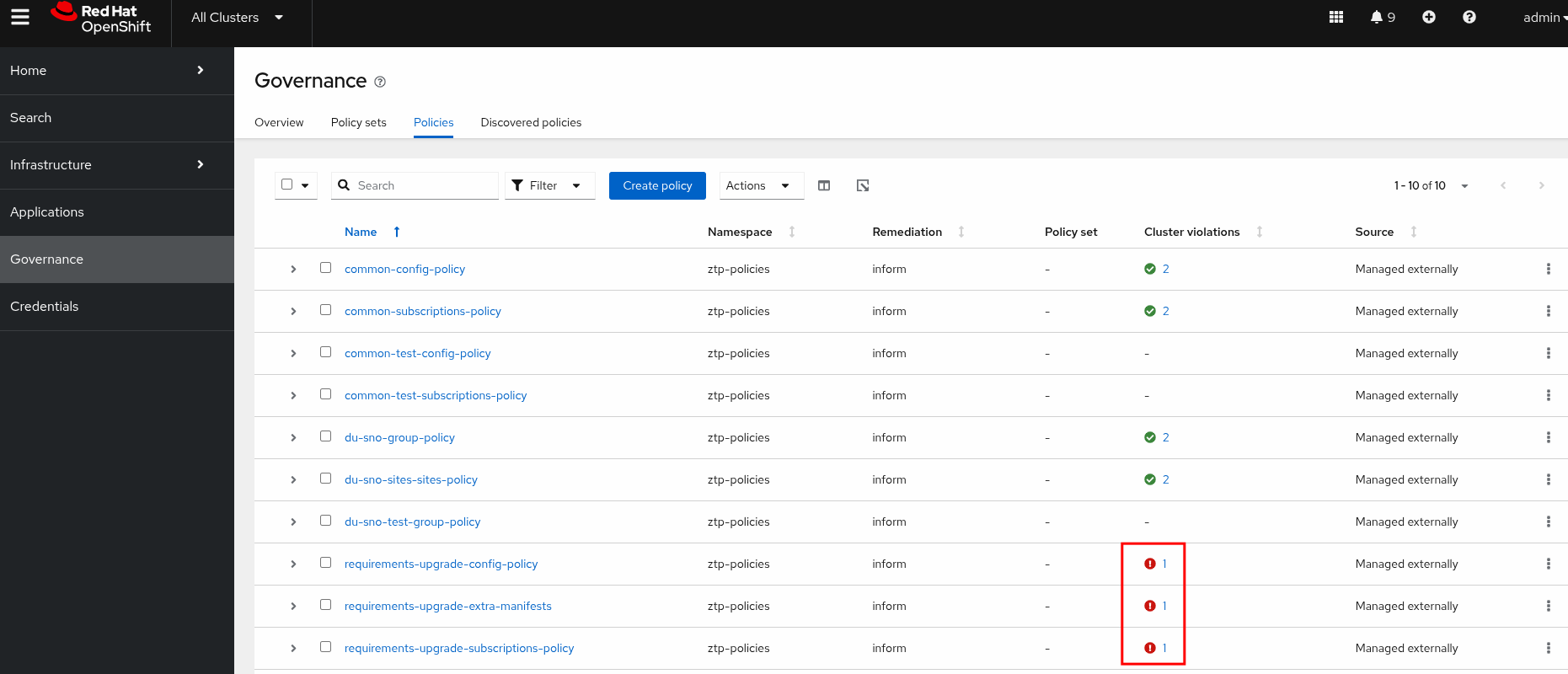

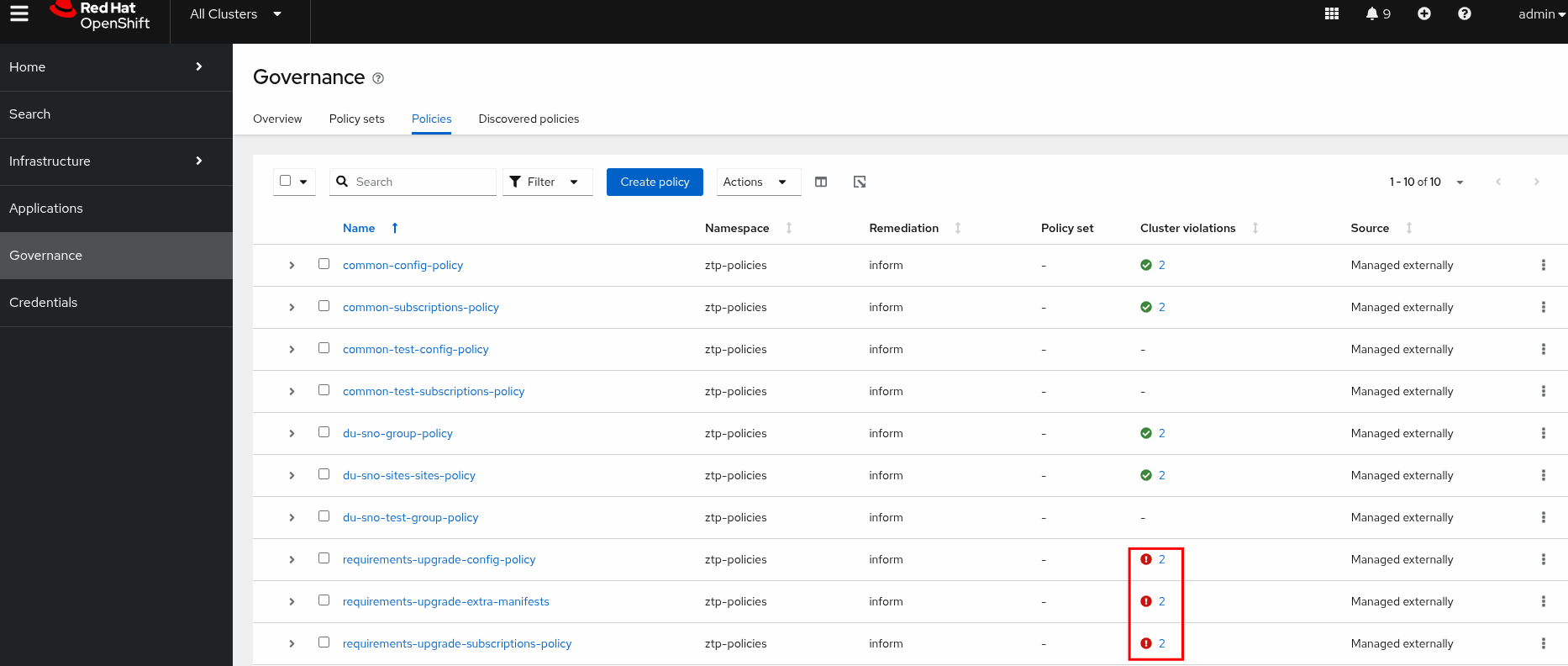

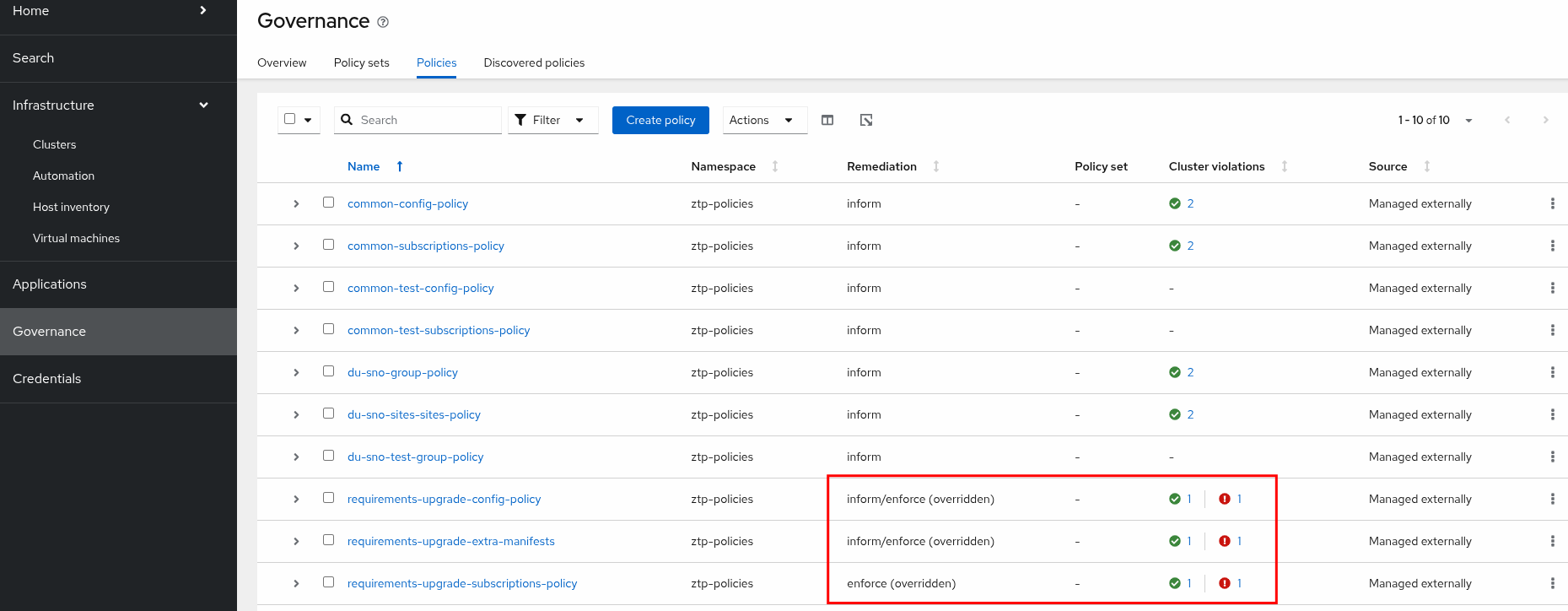

git push origin mainArgoCD will automatically synchronize the new policies and show them as non-compliant in the RHACM WebUI:

However, notice that only one cluster is not compliant. If we check the policy information, we will see that this policy is only targeting the sno-abi cluster. This is because the sno-ibi cluster does not have the du-zone=europe label that the PolicyGenerator requires in its placement labelSelector.

Let’s add the missing label to the sno-ibi SNO cluster:

oc --kubeconfig ~/hub-kubeconfig label managedcluster sno-ibi du-zone=europe
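The selection rule behind this behavior is plain equality matching: a cluster is bound to the policies only if it carries every key=value pair listed in the placement labelSelector. The sketch below illustrates that rule in isolation. The helper name labels_match is hypothetical, and the label set shown for the cluster before labeling is an assumption for illustration; the real labels live on the ManagedCluster objects in the hub.

```shell
# labels_match "SELECTOR" "LABELS"
# Hypothetical helper. Both arguments are space-separated key=value pairs.
# Succeeds only when every selector pair is present among the labels;
# otherwise prints the first missing pair and fails.
labels_match() {
  for want in $1; do
    found=no
    for have in $2; do
      if [ "$have" = "$want" ]; then
        found=yes
      fi
    done
    if [ "$found" = no ]; then
      echo "missing: $want"
      return 1
    fi
  done
  echo "match"
}

# Selector taken from the PolicyGenerator placement in this section.
selector="common=ocp419 logicalGroup=active du-zone=europe"

# Assumed label set before the du-zone label is added: not selected.
labels_match "$selector" "common=ocp419 logicalGroup=active" || true

# After labeling: selected.
labels_match "$selector" "common=ocp419 logicalGroup=active du-zone=europe"
```

On the hub itself, the same question can be asked with the standard label selector flag, for example by listing managedcluster objects with -l common=ocp419,logicalGroup=active,du-zone=europe.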

At this point, we need to create a Cluster Group Upgrade (CGU) resource that will start the preparation for the upgrade process.

cat <<EOF | oc --kubeconfig ~/hub-kubeconfig apply -f -
---
apiVersion: ran.openshift.io/v1alpha1
kind: ClusterGroupUpgrade
metadata:
  name: requirements-upgrade
  namespace: ztp-policies
spec:
  clusters:
    - sno-abi
    - sno-ibi
  managedPolicies:
    - requirements-upgrade-subscriptions-policy
    - requirements-upgrade-config-policy
    - requirements-upgrade-extra-manifests
  remediationStrategy:
    maxConcurrency: 2
    timeout: 240
EOF

As explained, the CGU enforces the recently created policies.

We can monitor the remediation process using the command line as well:

oc --kubeconfig ~/hub-kubeconfig get cgu -n ztp-policies

NAMESPACE      NAME                   AGE   STATE        DETAILS
ztp-policies   requirements-upgrade   35s   InProgress   Remediating non-compliant policies

In a few minutes, we will see output similar to the following:

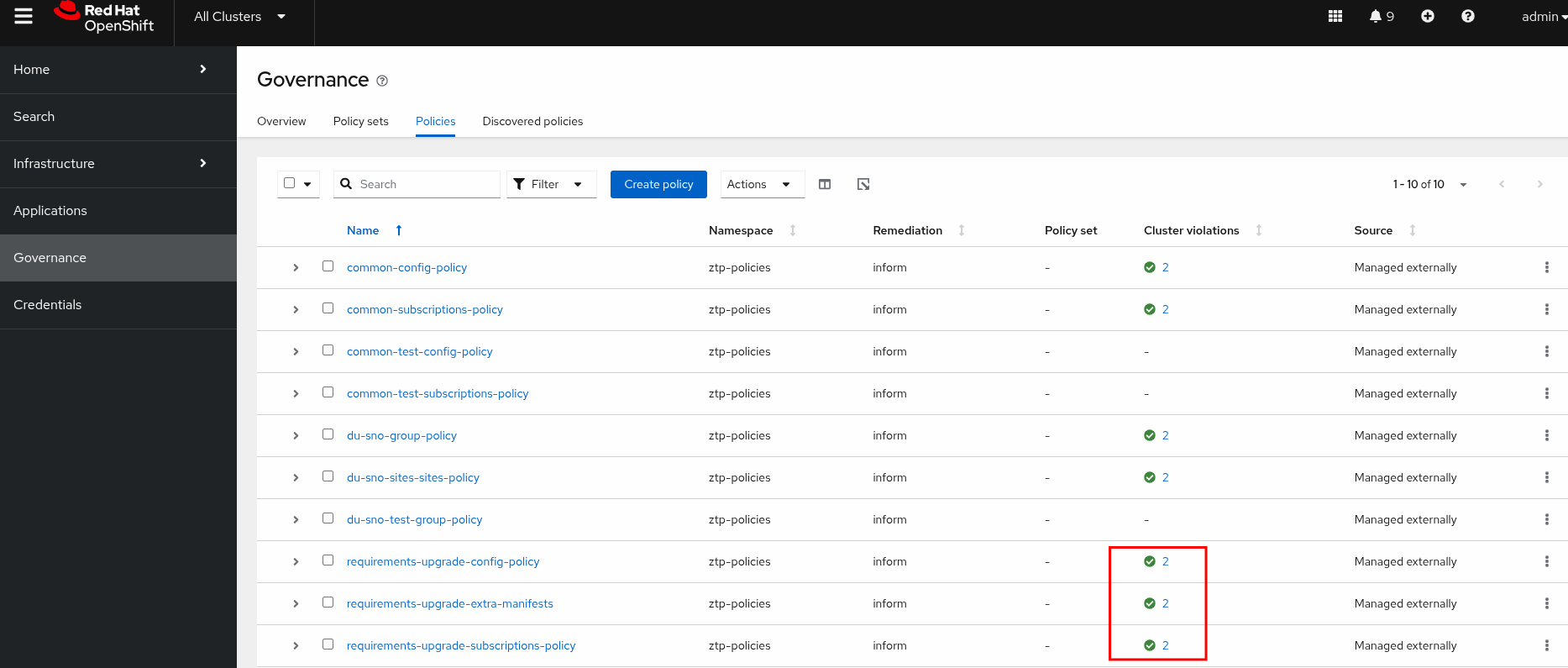

NAMESPACE      NAME                   AGE     STATE       DETAILS
ztp-policies   requirements-upgrade   2m52s   Completed   All clusters are compliant with all the managed policies
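Instead of re-running the command by hand, the check can be wrapped in a small polling loop. The sketch below is not part of TALM: wait_for_state is a hypothetical helper that re-runs any command until its output equals the expected state or a retry budget runs out. The jsonpath expression in the commented usage line is an assumption about where the CGU exposes the value shown in the STATE column; verify it against your cluster before relying on it.

```shell
# wait_for_state EXPECTED RETRIES DELAY_SECONDS CMD [ARGS...]
# Hypothetical helper: re-runs CMD until its stdout equals EXPECTED.
# Returns 0 on success, 1 when the retry budget is exhausted.
wait_for_state() {
  expected=$1
  retries=$2
  delay=$3
  shift 3
  attempt=1
  while [ "$attempt" -le "$retries" ]; do
    current=$("$@" 2>/dev/null)
    if [ "$current" = "$expected" ]; then
      echo "state is $expected"
      return 0
    fi
    echo "attempt $attempt/$retries: state is '${current:-unknown}', retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
  done
  return 1
}

# Possible usage against the hub (assumed jsonpath, roughly 10 minutes budget):
# wait_for_state Completed 40 15 \
#   oc --kubeconfig ~/hub-kubeconfig get cgu requirements-upgrade -n ztp-policies \
#   -o jsonpath='{.status.conditions[-1:].reason}'
```

Keeping the command as a parameter means the same loop can be reused later for other resources whose state is printed by a single command.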

Finally, once the upgrade policy is applied successfully, we can remove it to prevent continuous compliance checks against our SNO clusters, which would otherwise consume valuable hub cluster resources (CPU and memory cycles). Do not forget to commit the changes:

sed -i "s|- requirements-upgrade.yaml|#- requirements-upgrade.yaml|" ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/v4.19/kustomization.yaml
cd ~/5g-deployment-lab/ztp-repository/site-policies
git add ~/5g-deployment-lab/ztp-repository/site-policies/fleet/active/v4.19/kustomization.yaml
git commit -m "Removes upgrade policy once it is applied"
git push origin main

ArgoCD will automatically synchronize the change and remove the policies from the RHACM WebUI.

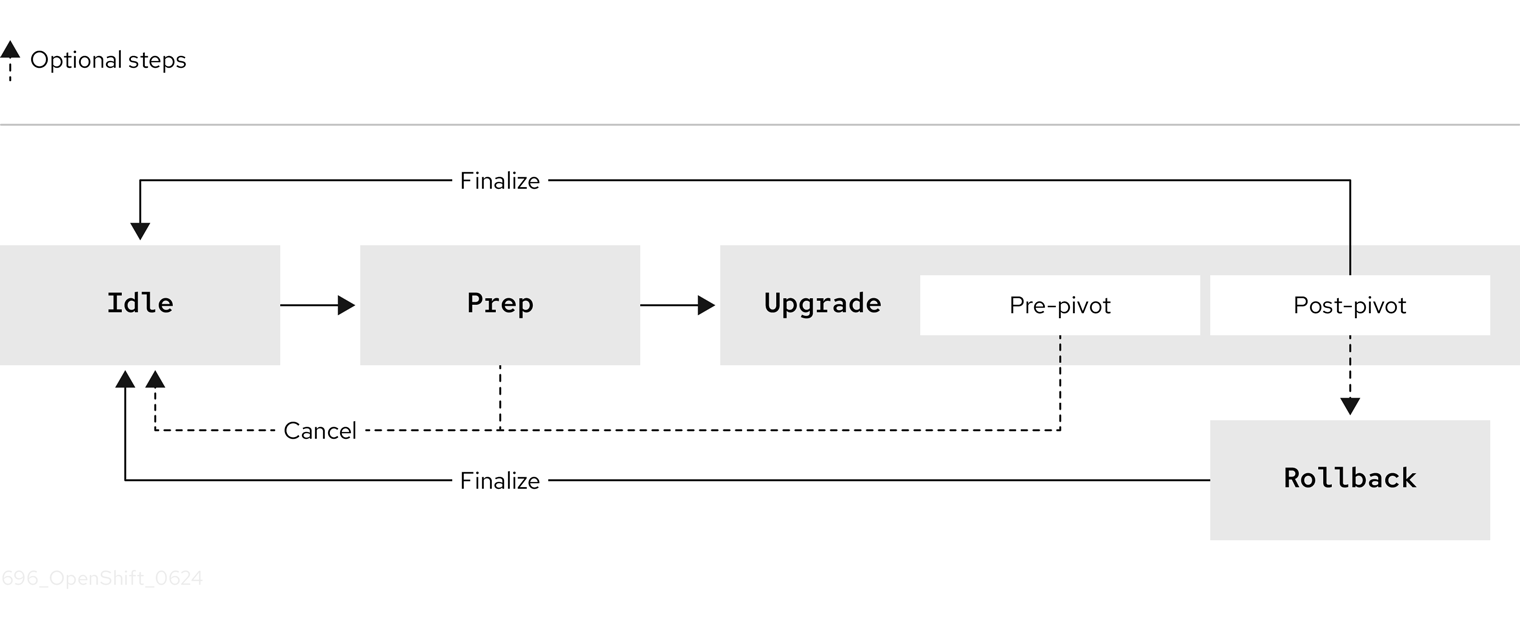

Creating the Image Based Group Upgrade

The ImageBasedGroupUpgrade (IBGU) CR combines the ImageBasedUpgrade and ClusterGroupUpgrade APIs. It simplifies the upgrade process by letting a single custom resource on the hub cluster manage an image-based upgrade on a selected group of managed clusters throughout all stages. A detailed view of the different upgrade stages driven by the LCA operator is provided in the Image Based Upgrades section.

Let’s apply the IBGU and start the image-based upgrade process in both clusters simultaneously:

| Note how we can include extra install configurations (extraManifests) and backup/restore steps (oadpContent) within the same custom resource. |

cat <<EOF | oc --kubeconfig ~/hub-kubeconfig apply -f -
---
apiVersion: v1
kind: Secret
metadata:
  name: disconnected-registry-pull-secret
  namespace: default
stringData:
  .dockerconfigjson: '{"auths":{"infra.5g-deployment.lab:8443":{"auth":"YWRtaW46cjNkaDR0MSE="}}}'
type: kubernetes.io/dockerconfigjson
---
apiVersion: lcm.openshift.io/v1alpha1
kind: ImageBasedGroupUpgrade
metadata:
  name: telco5g-lab
  namespace: default
spec:
  clusterLabelSelectors:
    - matchExpressions:
        - key: name
          operator: In
          values:
            - sno-abi
            - sno-ibi
  ibuSpec:
    seedImageRef:
      image: infra.5g-deployment.lab:8443/rhsysdeseng/lab5gran:v4.19.1
      version: 4.19.1
      pullSecretRef:
        name: disconnected-registry-pull-secret
    extraManifests:
      - name: disconnected-ran-config
        namespace: openshift-lifecycle-agent
    oadpContent:
      - name: oadp-cm
        namespace: openshift-adp
  plan:
    - actions: ["Prep", "Upgrade", "FinalizeUpgrade"]
      rolloutStrategy:
        maxConcurrency: 10
        timeout: 2400
EOF

Check that an IBGU object has been created in the hub cluster, along with an associated CGU:

oc --kubeconfig ~/hub-kubeconfig get ibgu,cgu -n default

NAMESPACE   NAME                                                  AGE
default     imagebasedgroupupgrade.lcm.openshift.io/telco5g-lab   102s

NAMESPACE   NAME                                                                              AGE    STATE        DETAILS
default     clustergroupupgrade.ran.openshift.io/telco5g-lab-prep-upgrade-finalizeupgrade-0   102s   InProgress   Rolling out manifestworks

Monitoring the Image Based Group Upgrade

As explained in the Lifecycle Agent Operator (LCA) section, the SNO clusters will progress through the stages listed in the IBGU plan: Prep, Upgrade, and FinalizeUpgrade.

The progress of the upgrade can be tracked by checking the status field of the IBGU object:

oc --kubeconfig ~/hub-kubeconfig get ibgu -n default telco5g-lab -ojson | jq .status

{
  "clusters": [
    {
      "currentAction": {
        "action": "Prep"
      },
      "name": "sno-abi"
    },
    {
      "currentAction": {
        "action": "Prep"
      },
      "name": "sno-ibi"
    }
  ],
  "conditions": [
    {
      "lastTransitionTime": "2025-02-13T09:27:47Z",
      "message": "Waiting for plan step 0 to be completed",
      "reason": "InProgress",
      "status": "True",
      "type": "Progressing"
    }
  ],
  "observedGeneration": 1
}

The output shows that the upgrade has just started because both sno-abi and sno-ibi are in the Prep stage.

We can also monitor progress by connecting directly to the managed clusters and obtaining the status of the IBU resource:

| The Prep stage is the current stage, as indicated by the Desired Stage field. The Details field provides extra information; in this case, it indicates preparation to copy the stateroot seed image. |

| During the Prep stage, the clusters will start pulling images and performing I/O-intensive operations to copy the new stateroot seed image. This can put significant pressure on the hypervisor, potentially causing repeated, temporary losses of connectivity to the cluster APIs over a period of about 10 minutes. |

oc --kubeconfig ~/abi-cluster-kubeconfig get ibuNAME AGE DESIRED STAGE STATE DETAILS

upgrade 36s Prep InProgress Stateroot setup job in progress. job-name: lca-prep-stateroot-setup, job-namespace: openshift-lifecycle-agentOnce the Prep stage is complete, the IBGU object will automatically move to the Upgrade stage. The output below shows that both SNO clusters have completed the Prep stage (completedActions) and their currentAction is Upgrade.

oc --kubeconfig ~/hub-kubeconfig get ibgu -n default telco5g-lab -ojson | jq .status

{
  "clusters": [
    {
      "completedActions": [
        {
          "action": "Prep"
        }
      ],
      "currentAction": {
        "action": "Upgrade"
      },
      "name": "sno-abi"
    },
    {
      "completedActions": [
        {
          "action": "Prep"
        }
      ],
      "currentAction": {
        "action": "Upgrade"
      },
      "name": "sno-ibi"
    }
  ],
  "conditions": [
    {
      "lastTransitionTime": "2025-03-05T11:50:26Z",
      "message": "Waiting for plan step 0 to be completed",
      "reason": "InProgress",
      "status": "True",
      "type": "Progressing"
    }
  ],
  "observedGeneration": 1
}

| At some point during the Upgrade stage, the SNO clusters will restart and boot from the new stateroot. Depending on the host, it can take several minutes until the Kubernetes API is available again. |
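Because the managed cluster API can disappear for several minutes during the reboot, any script that queries the IBU resource directly needs to tolerate failed calls. The sketch below shows one way to do that, under some assumptions: the helper names `retry_until_available` and `get_ibu` are hypothetical, the attempt count and delay are arbitrary, and a non-zero exit code from `oc` is treated as "API not reachable yet", which may also hide other errors.

```python
import os
import subprocess
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_until_available(
    fetch: Callable[[], T],
    attempts: int = 30,
    delay_seconds: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[T]:
    """Call fetch() until it succeeds; return None if every attempt fails."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except (subprocess.CalledProcessError, OSError) as exc:
            # Assumption: failures here mean the node is still rebooting
            # into the new stateroot, so we wait and try again.
            print(f"attempt {attempt}/{attempts}: API not reachable yet ({exc})")
            if attempt < attempts:
                sleep(delay_seconds)
    return None


def get_ibu(kubeconfig: str = "~/abi-cluster-kubeconfig") -> str:
    """Return the table printed by `oc get ibu` on a managed cluster."""
    result = subprocess.run(
        ["oc", "--kubeconfig", os.path.expanduser(kubeconfig), "get", "ibu"],
        check=True,           # raise CalledProcessError on a non-zero exit
        capture_output=True,
        text=True,
    )
    return result.stdout
```

Calling `retry_until_available(get_ibu)` would then keep polling sno-abi for up to roughly ten minutes with the default values; passing a different `fetch` callable reuses the same loop for sno-ibi.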

After some time, the SNO clusters will move to the FinalizeUpgrade stage. The output below shows that sno-ibi has moved to the FinalizeUpgrade stage, while sno-abi is still in the previous stage (Upgrade).

oc --kubeconfig ~/hub-kubeconfig get ibgu -n default telco5g-lab -ojson | jq .status

{
  "clusters": [
    {
      "completedActions": [
        {
          "action": "Prep"
        }
      ],
      "currentAction": {
        "action": "Upgrade"
      },
      "name": "sno-abi"
    },
    {
      "completedActions": [
        {
          "action": "Prep"
        },
        {
          "action": "Upgrade"
        }
      ],
      "currentAction": {
        "action": "FinalizeUpgrade"
      },
      "name": "sno-ibi"
    }
  ],
  "conditions": [
    {
      "lastTransitionTime": "2025-03-05T11:50:26Z",
      "message": "Waiting for plan step 0 to be completed",
      "reason": "InProgress",
      "status": "True",
      "type": "Progressing"
    }
  ],
  "observedGeneration": 1
}

During the FinalizeUpgrade stage, the SNO clusters are restoring the data that was backed up. This includes cluster-specific configurations like ACM and LVM setups, which were defined in the disconnected-ran-config ConfigMap mentioned in the Preparing Managed Clusters for Upgrade section. Connecting to sno-ibi during this stage will show the restore process running.

oc --kubeconfig ~/ibi-cluster-kubeconfig get ibu

NAME      AGE     DESIRED STAGE   STATE        DETAILS
upgrade   8m46s   Upgrade         InProgress   Restore of Application Data is in progress

Finally, both SNO clusters have completed the FinalizeUpgrade stage:

oc --kubeconfig ~/hub-kubeconfig get ibgu -n default telco5g-lab -ojson | jq .status

{
  "clusters": [
    {
      "completedActions": [
        {
          "action": "Prep"
        },
        {
          "action": "Upgrade"
        },
        {
          "action": "FinalizeUpgrade"
        }
      ],
      "name": "sno-abi"
    },
    {
      "completedActions": [
        {
          "action": "Prep"
        },
        {
          "action": "Upgrade"
        },
        {
          "action": "FinalizeUpgrade"
        }
      ],
      "name": "sno-ibi"
    }
  ],
  "conditions": [
    {
      "lastTransitionTime": "2025-02-13T09:47:57Z",
      "message": "All plan steps are completed",
      "reason": "Completed",
      "status": "False",
      "type": "Progressing"
    }
  ],
  "observedGeneration": 1
}

We can also check that the CGU associated with the IBGU shows as Completed:

oc --kubeconfig ~/hub-kubeconfig get ibgu,cgu -n default

NAMESPACE   NAME                                                  AGE
default     imagebasedgroupupgrade.lcm.openshift.io/telco5g-lab   22m

NAMESPACE   NAME                                                                              AGE   STATE       DETAILS
default     clustergroupupgrade.ran.openshift.io/telco5g-lab-prep-upgrade-finalizeupgrade-0   22m   Completed   All manifestworks rolled out successfully on all clusters

Note that once the upgrade is finished, the IBU CR from both clusters moves back to the initial state: Idle.

oc --kubeconfig ~/ibi-cluster-kubeconfig get ibu

NAME      AGE     DESIRED STAGE   STATE   DETAILS
upgrade   9m37s   Idle            Idle    Idle
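The completion signals seen in the final IBGU status (a Progressing condition with status False and reason Completed, plus every plan action listed under completedActions) can also be checked programmatically. The following is a rough sketch under those assumptions; the function names are hypothetical, and the sample is trimmed from the final status output shown earlier in this section.

```python
from typing import Any, Dict, List, Sequence

# Actions taken from the plan in the ImageBasedGroupUpgrade CR of this lab.
PLAN_ACTIONS = ("Prep", "Upgrade", "FinalizeUpgrade")


def ibgu_completed(status: Dict[str, Any]) -> bool:
    """True when the Progressing condition is False with reason Completed."""
    for condition in status.get("conditions", []):
        if condition.get("type") == "Progressing":
            return (
                condition.get("status") == "False"
                and condition.get("reason") == "Completed"
            )
    return False  # no Progressing condition reported yet


def pending_clusters(
    status: Dict[str, Any], plan: Sequence[str] = PLAN_ACTIONS
) -> List[str]:
    """Names of clusters that have not completed every action in the plan."""
    pending = []
    for cluster in status.get("clusters", []):
        done = {entry["action"] for entry in cluster.get("completedActions", [])}
        if not set(plan) <= done:
            pending.append(cluster["name"])
    return pending


# Sample trimmed from the final status output in this section.
all_done = [{"action": action} for action in PLAN_ACTIONS]
final_status = {
    "clusters": [
        {"completedActions": all_done, "name": "sno-abi"},
        {"completedActions": all_done, "name": "sno-ibi"},
    ],
    "conditions": [
        {"reason": "Completed", "status": "False", "type": "Progressing"}
    ],
}

print("completed:", ibgu_completed(final_status))
print("pending:", pending_clusters(final_status))
```

Checking both the condition and the per-cluster list is deliberately redundant: the condition summarizes the whole group, while the pending list points at the specific cluster that is lagging.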

| Note the time it took to update the SNO clusters. As shown above, sno-ibi took less than 10 minutes to run a z-stream update. Compared with the regular update time of around 40 minutes, the reduction is significant. |

As a final verification, check that both upgraded clusters comply with the Telco RAN DU reference configuration and that the OpenShift version shown in the Infrastructure tab is the expected one: