PolicyGen DeepDive

As we saw in an earlier section of the lab, we can leverage RHACM policies to get our clusters configured the way we want. We also introduced the PolicyGen component which helps us to create a set of policies based on pre-baked templates (source-crs) crafted by the Red Hat CNF team.

In this section, we are going to get into the implementation details of the PolicyGen tooling.

PolicyGen Implementation

The policy generator is implemented as a Kustomize generator plugin. This allows for any Kustomize configuration (e.g. patches, common labels) to be applied to the generated policies. This also means that the policy generator can be integrated into existing GitOps processes that support Kustomize, such as RHACM Application Lifecycle Management and OpenShift GitOps (i.e. ArgoCD).

There are two Kustomize plugins. We refer to them collectively as PolicyGenTool, but each has its own name and purpose:

- SiteConfig Generator. Reads the SiteConfig manifest and generates the required Kubernetes manifests to run a RAN-enabled OpenShift deployment using RHACM. Code is located here.

- Policy Generator. Reads the PolicyGenTemplate manifest and generates the required Kubernetes manifest to configure an RHACM Policy targeting a set of clusters. Code is located here.

Kustomize Plugins

As we already said, PolicyGenTool is a collection of Kustomize plugins. Kustomize plugins work as described below.

| When installing these tools in ArgoCD running on a hub cluster, the configuration described in this section is handled automatically. The ArgoCD operator will find the plugin binaries and use them to generate CRs from SiteConfig and PolicyGenTemplates. This section is provided as background/reference. |

Kustomize looks for plugins in a configured path (by default ~/.config/kustomize/plugin/). Inside this path, folders are laid out so that Kustomize can work out which plugin to execute for a given input file. Let’s walk through how this works.

If we look at this kustomization.yaml:

    apiVersion: kustomize.config.k8s.io/v1beta1
    kind: Kustomization
    generators:
    - ztp-sno-lab.yaml
    - common-policies/common.yaml

We see that the generators section lists two YAML files. Generators tell Kustomize that a plugin needs to be run to interpret these files. Kustomize knows which plugin to execute by looking at the GroupVersionKind (GVK) of each file. Continuing with the example above, we find the following GVKs:

ztp-sno-lab.yaml

    apiVersion: ran.openshift.io/v1
    kind: SiteConfig

common-policies/common.yaml

    apiVersion: ran.openshift.io/v1
    kind: PolicyGenTemplate

As we can see, the group is ran.openshift.io, the version is v1, and the kinds are SiteConfig and PolicyGenTemplate.

Earlier, we talked about the Kustomize plugins path and how it requires a proper folder structure so that Kustomize knows which plugin to execute. Using the examples above, we can explain what Kustomize does to process these two files.

ztp-sno-lab.yaml

Kustomize reads the GVK, then goes to the plugins path and executes the plugin binary at $KUSTOMIZE_PLUGINS_PATH/<group>/<version>/<kind>/<pluginbinary>, where the <kind> directory is the lowercased kind. In this case, Kustomize executes the binary at ~/.config/kustomize/plugin/ran.openshift.io/v1/siteconfig/SiteConfig.

common-policies/common.yaml

Kustomize follows the same lookup for this file, using the path $KUSTOMIZE_PLUGINS_PATH/<group>/<version>/<kind>/<pluginbinary>. In this case, Kustomize executes the binary at ~/.config/kustomize/plugin/ran.openshift.io/v1/policygentemplate/PolicyGenTemplate.
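The lookup described above can be sketched in a few lines of Python. This is only an illustration of the folder layout, not Kustomize code: the function name plugin_binary_path and its plugin_root parameter are made up for this example, and the default root is the path mentioned earlier in this section.

```python
from pathlib import PurePosixPath


def plugin_binary_path(api_version: str, kind: str,
                       plugin_root: str = "~/.config/kustomize/plugin") -> str:
    """Build <root>/<group>/<version>/<lowercased kind>/<Kind> from a GVK.

    Hypothetical helper that mirrors the layout described in the text.
    """
    # "ran.openshift.io/v1" -> group "ran.openshift.io", version "v1"
    group, _, version = api_version.rpartition("/")
    if not group:
        raise ValueError(f"apiVersion {api_version!r} has no group")
    return str(PurePosixPath(plugin_root) / group / version / kind.lower() / kind)


# The two generator files from the kustomization.yaml example.
for manifest in (
    {"apiVersion": "ran.openshift.io/v1", "kind": "SiteConfig"},
    {"apiVersion": "ran.openshift.io/v1", "kind": "PolicyGenTemplate"},
):
    print(plugin_binary_path(manifest["apiVersion"], manifest["kind"]))
```

Running it prints the same two binary paths listed above, which is a quick way to check that a local plugin directory is laid out as expected.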

SiteConfigGenerator

The SiteConfigGenerator reads the SiteConfig manifest and uses the information provided in that file to output a set of manifests that will be used to run a cluster installation. The manifest templates can be found here.

Policy Generator

The PolicyGenerator reads the PolicyGenTemplate manifests and uses the information provided in these files to output a set of RHACM policies that serve different purposes, from day-2 operations to monitoring cluster health. It makes use of templated policies that can be found here.

| A high level view of the installation and configuration workflow can be found in the previous section ZTP workflow. |

5G RAN Profile

The Red Hat CNF team has crafted specific configurations to improve the performance on clusters running RAN workloads. All the configurations described below are expected to be present in a cluster where RAN workloads are expected to run.

| Some of these configurations are configured at install time (through the use of extra-manifests during the installation) and others are configured through Policy automatically after the cluster joins the RHACM Hub. |

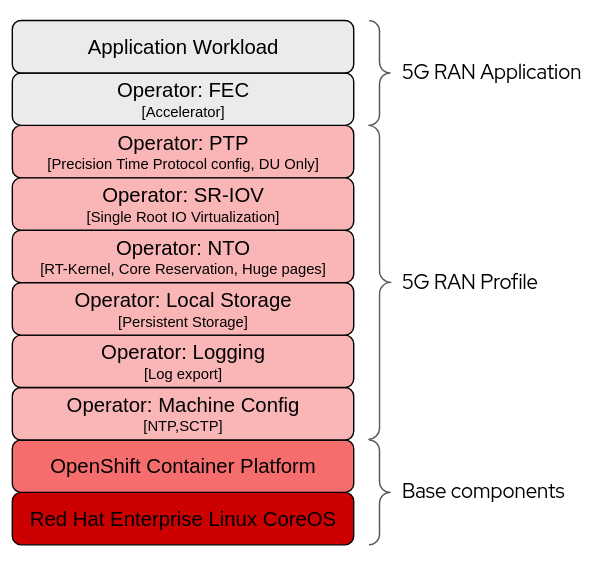

The picture above shows the areas covered by the 5G RAN profile. Red Hat covers all layers except the top two, which are part of the application domain. The individual 5G RAN profile configurations are described below.

A more detailed explanation of the 5G RAN Profile is covered in this training material.

Workload Partitioning

Pins OCP platform, operating system processes and day2 operator Pods that are part of the DU profile to the reserved cpuset.

Kubelet Tuning

Reduce frequency of kubelet housekeeping and eviction monitoring to reduce CPU usage.

SCTP

Enable SCTP kernel module which is required by RAN applications but disabled by default in RHCOS.

Container Mount Hiding

To reduce system mount scanning overhead, creates a mount namespace for the container mounts visible to kubelet/CRI-O.

Recovery Optimization

A systemd service that assigns additional CPUs to systemd services during reboot recovery.

Monitoring Operator Config

Reduce the footprint of the monitoring stack by:

- Reducing the Prometheus retention period, since metrics are already being aggregated at the RHACM hub.

- Disabling the local Grafana and Alertmanager.

Console Operator

Since the DUs are being centrally managed from a RHACM hub, disable the local console to reduce resource consumption.

OperatorHub

A single catalog source is recommended that contains only the operators required for a RAN DU deployment.

PTP Operator

Configuration of the Precision Time Protocol (PTP). The DU can run in the following modes:

- Ordinary clock synced to a grandmaster (GM) or boundary clock.

- In addition to the above, optionally provide a boundary clock for the RU.

This also includes an optional event notification service that applications can subscribe to for PTP events.

SR-IOV

Provision and configure the SR-IOV CNI and device plugin. Both netdevice (kernel VFs) and vfio (DPDK) are supported.

This will be customer specific.

Node Tuning Operator

Performance tuning interface that:

- Enables the RT kernel.

- Enables kubelet features (CPU manager, topology manager, memory manager).

- Configures huge pages.

- Configures reserved and isolated cpusets.

- Adds additional kernel args.

Local Storage

Enables creation of Persistent Volumes which can be consumed as PVCs by applications. This will be customer specific.

SiteConfig Templating

The SiteConfig templating is pretty straightforward: whatever information we put into our SiteConfig manifest is translated into the manifests required to initiate the cluster installation. You can find a SiteConfig example here.

Policies Templating

Policies templating is a bit different. We have a set of base configuration CRs, known as source-crs, that we can use. This set of CRs can be found here.

If you access this link you will see lots of files, for example:

- AcceleratorNS.yaml

- AmqInstance.yaml

- etc.

These CRs are referenced (by filename) from the PolicyGenTemplate CRs in Git. The PolicyGenTemplate CRs act as a manifest of which configuration CRs should be included and how they should be combined into Policy CRs. In addition, the PolicyGenTemplate can contain an "overlay" (or patch) that is applied to the CR before it is wrapped into the Policy.
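As a rough illustration of how source CRs are combined into policies, the sketch below groups sourceFiles entries by their policyName, so that entries sharing a name land in the same policy. This is an assumption about the grouping rule made for illustration, not the plugin's actual code; the function name group_by_policy and the policy names policy-a and policy-b are hypothetical.

```python
from collections import defaultdict


def group_by_policy(source_files):
    """Map each policyName to the source CR file names that share it.

    Hypothetical sketch: the real PolicyGen plugin also wraps the CRs
    into Policy objects, which is not modelled here.
    """
    grouped = defaultdict(list)
    for entry in source_files:
        grouped[entry["policyName"]].append(entry["fileName"])
    return dict(grouped)


# Illustrative sourceFiles list; the policy names are made up.
source_files = [
    {"fileName": "AcceleratorNS.yaml", "policyName": "policy-a"},
    {"fileName": "AmqInstance.yaml", "policyName": "policy-a"},
    {"fileName": "SriovOperatorConfig.yaml", "policyName": "policy-b"},
]

for policy, files in group_by_policy(source_files).items():
    print(policy, files)
```

Under this assumption, the first two CRs would be delivered by one policy and the third by another.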

This works as follows. We have one or more PolicyGenTemplates that reference these base configuration CRs. We can see an example here.

Let’s use the following PolicyGenTemplate as an example.

    apiVersion: ran.openshift.io/v1
    kind: PolicyGenTemplate
    metadata:
      name: "test"
      namespace: "test"
    spec:
      bindingRules:
        test: "true"
      mcp: "master"
      sourceFiles:
        - fileName: SriovOperatorConfig.yaml
          policyName: "config-policy"
        - fileName: PerformanceProfile.yaml
          policyName: "config-policy"
          spec:
            cpu:
              isolated: "2-19,22-39"
              reserved: "0-1,20-21"
            hugepages:
              defaultHugepagesSize: 1G
              pages:
                - size: 1G
                  count: 32

We can see that the PolicyGenTemplate is targeting two base configuration CRs: SriovOperatorConfig.yaml and PerformanceProfile.yaml.

The first source CR, SriovOperatorConfig.yaml, does not override any settings. We can tell because there is no spec or metadata section below its policyName. That means the final policy will include the base SriovOperatorConfig configuration CR without modification.

The second source CR, PerformanceProfile.yaml, overrides some parameters in the spec. That means the final policy will contain a merge of the original CR and the custom overrides we provided in the PolicyGenTemplate.
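The merge can be pictured as a recursive dictionary merge in which override values win. The sketch below is a simplified model, not the plugin's implementation: merge_overlay is a hypothetical helper, base_cr is a made-up, trimmed stand-in for PerformanceProfile.yaml, and lists in the overlay simply replace lists in the base, which may differ from what the real plugin does.

```python
import copy


def merge_overlay(base, overlay):
    """Return a copy of base with overlay values merged in recursively."""
    merged = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Both sides are mappings: descend and merge key by key.
            merged[key] = merge_overlay(merged[key], value)
        else:
            # Scalars and lists from the overlay replace the base value.
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical, simplified base CR (not the real source-cr contents).
base_cr = {
    "kind": "PerformanceProfile",
    "spec": {
        "cpu": {"isolated": "2-3", "reserved": "0-1"},
        "realTimeKernel": {"enabled": True},
    },
}

# Overrides taken from the PolicyGenTemplate example above.
overlay = {"spec": {"cpu": {"isolated": "2-19,22-39", "reserved": "0-1,20-21"}}}

result = merge_overlay(base_cr, overlay)
print(result["spec"])
```

In this model, settings that the overlay does not mention (here realTimeKernel) are carried over from the base CR unchanged.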

Inside the templates we often see references that look like variables, such as $size or $count. These are not variables. They tell the PolicyGen plugin to remove that line from the output if no value was provided as an override. The only reference that is actually substituted is $mcp, which is replaced with the value of the mcp property defined in the PolicyGenTemplate.
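The behaviour of these placeholders can be modelled with a small recursive function. This is a sketch under assumptions, not the plugin's code: resolve_placeholders and the template dictionary are hypothetical, and the sketch leaves a mapping in place even if all of its entries were removed.

```python
_DROP = object()  # sentinel marking a value whose line should be removed


def resolve_placeholders(node, mcp):
    """Substitute $mcp and drop values that are still '$' placeholders."""
    if isinstance(node, dict):
        resolved = {}
        for key, value in node.items():
            new_value = resolve_placeholders(value, mcp)
            if new_value is not _DROP:
                # $mcp may also appear inside a key, e.g. a label name.
                resolved[key.replace("$mcp", mcp)] = new_value
        return resolved
    if isinstance(node, list):
        items = (resolve_placeholders(item, mcp) for item in node)
        return [item for item in items if item is not _DROP]
    if isinstance(node, str):
        if "$mcp" in node:
            return node.replace("$mcp", mcp)
        if node.startswith("$"):
            return _DROP  # no override was provided for this placeholder
    return node


# Hypothetical template fragment after overrides were merged in:
# 'isolated' was overridden, 'reserved' was not.
template = {
    "spec": {
        "nodeSelector": {"node-role.kubernetes.io/$mcp": ""},
        "cpu": {"isolated": "2-19,22-39", "reserved": "$reserved"},
    }
}

print(resolve_placeholders(template, mcp="master"))
```

Here the reserved line disappears because it still holds a placeholder, while $mcp is replaced with master.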


Running Kustomize Plugins Locally

We have described how Argo CD makes use of the PolicyGenTool plugins to generate the required manifests for our 5G RAN deployments. In this section, we are going to cover how to run these plugins locally which can be useful while troubleshooting or getting a preview of what the files will look like when generated via Argo CD.

Running the steps below installs the plugins on our system. Kustomize should already be installed. If it is not, you can get it from the releases page.

    mkdir -p ~/.config/kustomize/plugin
    podman cp $(podman create --name policgentool --rm registry.redhat.io/openshift4/ztp-site-generate-rhel8:v4.12.3-3):/kustomize/plugin/ran.openshift.io ~/.config/kustomize/plugin/
    podman rm -f policgentool

Now that we have the plugins locally we can run them:

| We need to enable the alpha plugins by using the --enable-alpha-plugins parameter. |

    kustomize build site-configs/ --enable-alpha-plugins

We will get the output (manifests that will be consumed by the RHACM Hub).

    apiVersion: v1
    kind: Namespace
    metadata:
      annotations:
        argocd.argoproj.io/sync-wave: "0"
        ran.openshift.io/ztp-gitops-generated: '{}'
      labels:
        name: ztp-sno
      name: ztp-sno
    ---
    apiVersion: v1
    data:
      03-workload-partitioning.yaml: |
        apiVersion: machineconfiguration.openshift.io/v1
        kind: MachineConfig
        metadata:
          annotations:
    <OUTPUT_OMITTED>